Abstract

1. INTRODUCTION

According to a definition issued by the International Hydrographic Organization (IHO), hydrography is the branch of applied sciences which deals with the measurement and description of the physical features of oceans, seas, coastal areas, lakes and rivers, as well as with the prediction of their change over time, for the primary purpose of safety of navigation and in support of all other marine activities, including economic development, security and defense, scientific research, and environmental protection (IHO, 2022). In this context, the term bathymetry is also in use. Both words stem from Greek: while hydrography translates to “describing water”, bathymetry means “measuring depths”. Thus, bathymetry is the more specific term, but both are often used interchangeably in the sense of mapping underwater geometry.

Precise knowledge of the shape and change of underwater topography and objects is the basis for a variety of socio-economic and ecological topics. The former include safety of navigation, flood risk assessment, hazard zone and protection planning, and the latter include restoration of coastal and alluvial areas, monitoring of their state and change, and assessment of the respective effects on aquatic habitats (hydrobiology, habitat modeling on micro- and meso-scale, etc.).

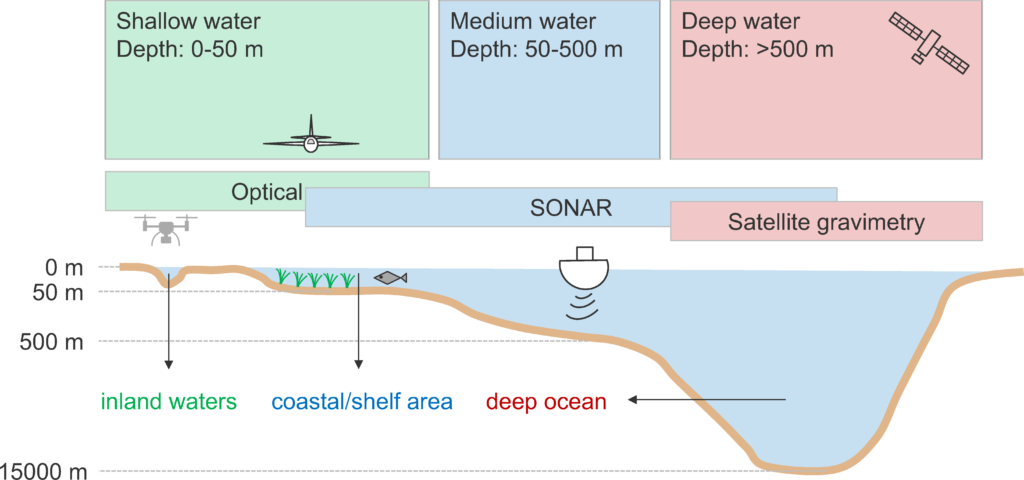

Optical methods are well suited for capturing bathymetry of clear and shallow coastal and inland water bodies with depths <60m from the air but are inappropriate for deeper waters due to the high absorption of light in water. In case the carrier platform is under water, optical methods can also be applied for mapping both the seabed and natural or human-made objects in deep water if the measuring distance is small. Hydroacoustic methods (Sound Navigation And Ranging, SONAR) are the first choice for medium water depths of 20–500m (Lurton, 2010). While SONAR systems can also measure deep water, satellite gravimetry and altimetry provide global coverage of deep ocean areas (Sandwell et al., 2014) with a spatial resolution in the km-range. Figure 1 schematically illustrates the depth categories and the appropriate hydrographic techniques.

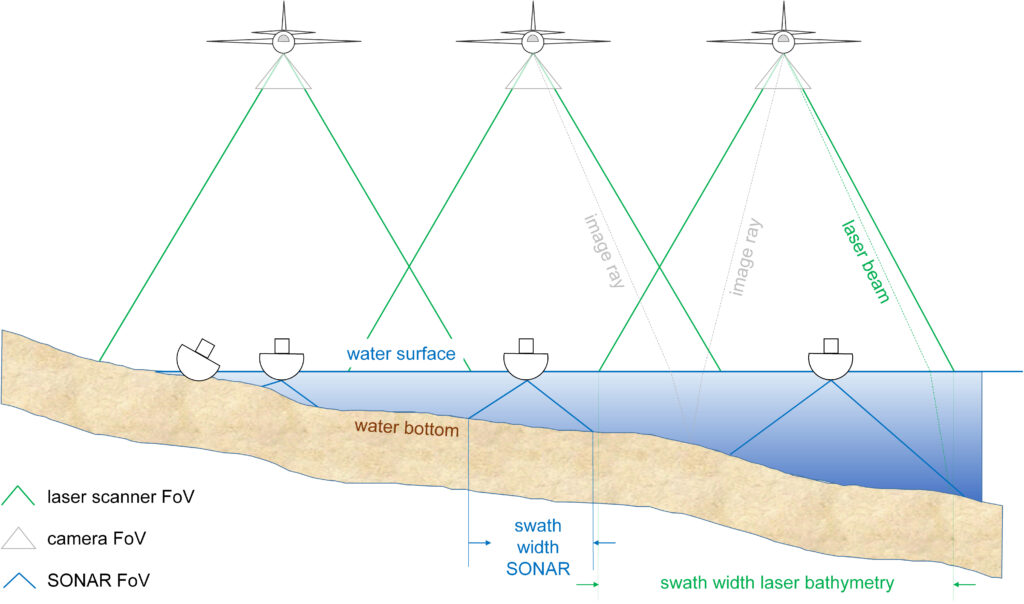

Within the shallow water domain, Figure 1 indicates an overlap between optical methods and SONAR. In fact, SONAR is the state-of-the-art capturing method for navigational purposes of both coastal and inland waterways and outperforms optical methods concerning depth penetration. However, the advantage of airborne optical methods is twofold: (i) the effective SONAR Field-of-View (FoV) drops with decreasing water depth, whereas the swath width mainly depends on the flying altitude and only to a minor extent on the water depth for airborne data acquisition and (ii) shipborne SONAR requires a minimum water depth for safe operation (cf. Figure 2). Furthermore, airborne optical methods provide a seamless coverage from the water bottom via the littoral zone to the dry nearshore area (Guenther et al., 2000; Schwarz et al., 2019; Yang, Qi et al., 2022).

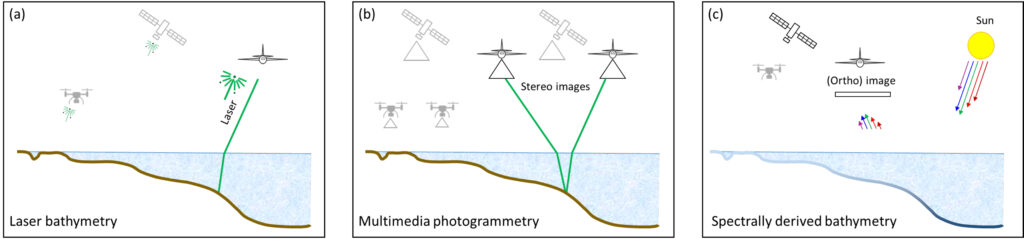

For hydrographic surveys using optical remote sensing, the following methods have become state-of-the-art: (i) spectrally derived bathymetry based on multispectral images (SDB), (ii) multimedia photogrammetry based on stereo images also referred to as photo bathymetry, and (iii) airborne laser bathymetry (ALB) also referred to as airborne laser hydrography (ALH). While the first two methods are passive and use backscattered solar radiation from the bottom of the water body for depth measurements, ALB is an active method based on Time-of-Flight (ToF) measurements of a pulsed green laser. In the past, the above mentioned methods were used separately. However, today a clear trend towards hybrid multi-sensor systems integrating both cameras and laser scanners can be observed (Fuchs and Tuell, 2010; Toschi et al., 2018; Legleiter and Harrison, 2019). Figure 3 shows a schematic diagram of the three main optical methods in hydrography.

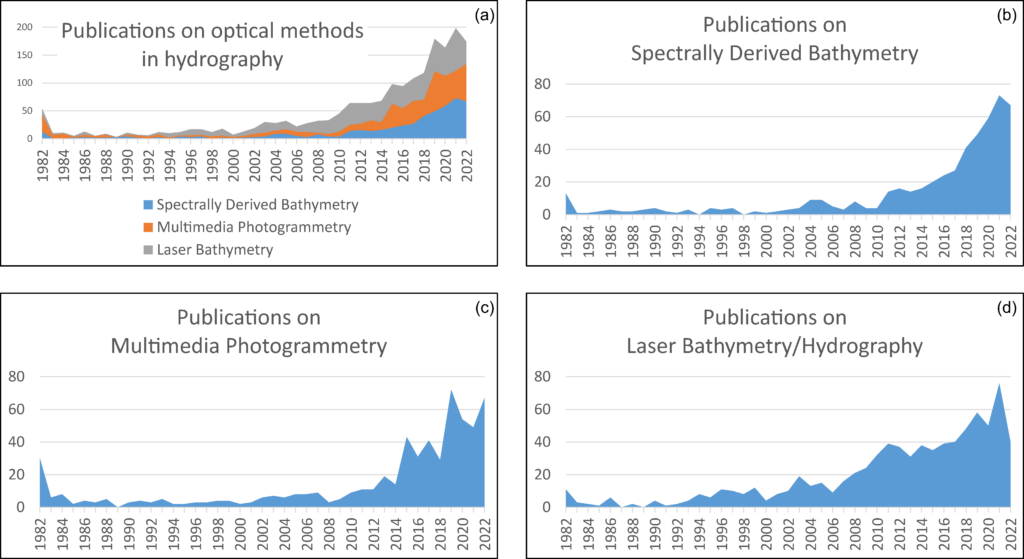

The seminal paper on multimedia photogrammetry dates back to 1948 (Rinner, 1948). The first applications of the laser (light amplification by stimulated emission of radiation), invented in the 1960s, were finding submarines (Sorenson et al., 1966) and mapping near-shore bathymetry (Hickman and Hogg, 1969). Not much later, Poicyn et al. (1970) described multispectral approaches to derive water depth from satellite images. Thus, all the known passive and active optical hydrography methods had been introduced by 1970, and we are already looking back at 50 years of history in optical hydrography. Today’s science community can build upon a considerable amount of seminal research on the subject matter, and developments concerning sensors, platforms and processing strategies are still boosting the field. This can clearly be seen from the increasing number of publications in recent years. The results of a bibliographic query in the citation database Scopus using the keywords spectrally derived bathymetry, multimedia photogrammetry and laser bathymetry (including similar terms like photo bathymetry, bathymetric LiDAR (Light Detection And Ranging), satellite bathymetry, etc.) are plotted in Figure 4.

Figure 4: Results of a bibliographic query in the Scopus citation database.

The results are presented as a summary of all optical methods (Figure 4a) and separately for SDB (Figure 4b), photo bathymetry (Figure 4c) and laser bathymetry (Figure 4d).

The small peak at the beginning of the timeline in 1982 results from aggregating earlier contributions into the first bin. Apart from that, it can clearly be seen from Figure 4a that until around the year 2000 fewer than 20 papers were published per year. Following a first smaller peak around 2004 with approximately 30 articles per year, a continuous increase started from 2010 on. At the level of the individual methods, it is interesting to note that more multimedia photogrammetry articles were published in the early days (before 1982, cf. left peak in Figure 4c). The likely reason is the earlier availability of photographic (stereo) cameras compared to multispectral satellites and bathymetric laser scanners. For SDB, it is noted that Landsat Level-1 multispectral images as well as Level-2 and Level-3 science products have been available for download from the U.S. Geological Survey (USGS) archive at no charge since 2008, which entailed a continuous rise of SDB-related publications starting in 2010 (cf. Figure 4b). For laser bathymetry (Figure 4d), a considerable peak can be seen in 2011 following the introduction of topo-bathymetric laser scanners, which aimed at providing high resolution for shallow water areas compared to the traditional low-resolution scanners optimized for depth performance.

The prominent peaks in 2014 and 2019 in Figures 4c and 4d, respectively, can be related to the increasing availability of UAV-borne (uncrewed aerial vehicle) imaging sensors. The stimulating impact of UAVs as carrier platforms is a general trend for photogrammetric mapping and 3D object reconstruction (Colomina and Molina, 2014). With respect to hydrographic applications (Yang, Yu et al., 2022), it is noted that the rise again came earlier for multimedia photogrammetry, as cameras are much lighter and it took longer to develop compact and lightweight laser scanners. However, advances in sensor and platform development are not the only driving force in this field; increased computing power has also enabled the use of machine learning techniques in geoscience in general (Dramsch, 2020) and in optical hydrography in particular (Al Najar et al., 2021; Moran et al., 2022).

When compared to a search with the general keywords hydrography or hydrographic (not shown in Figure 4), it is striking that until the year 2010 the share of scientific articles on optical bathymetry was only around 10%. From 2010 on, however, the share rose to more than 40% in 2021. This is another indication that optical methods have gained importance in the recent past.

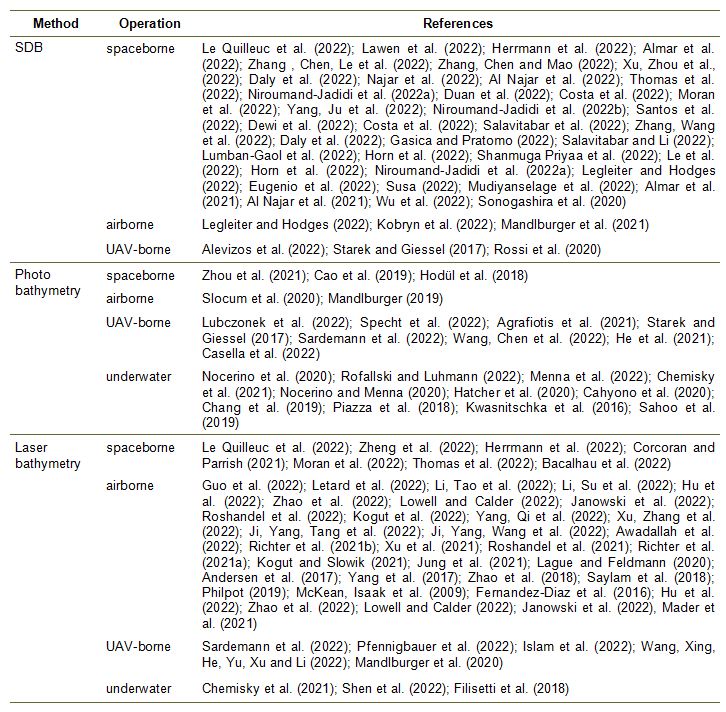

In general, Figure 4 reveals that within the last four decades the publication activity has increased by an order of magnitude with around 200 articles in 2020 and the trend is still unbroken as the apparent decline in 2022 is attributed to a conservative estimate for the 2nd half of 2022. Table 1 contains a selection of recent publications related to optical methods in hydrography highlighting the relevance of the topic. It can be seen from both Table 1 and Figure 4 that all three main fields (SDB, photo and laser bathymetry) are equally vital in the sense of publication activity and no distinct priority of a certain method is observable in general. However, Table 1 clearly reveals that SDB methods are mainly used with satellite images, laser bathymetry is predominantly employed from crewed aircraft, and multimedia photogrammetry is either performed from UAV or underwater platforms. The high number of publications underlines the importance of current research, especially against the background of climate change and its effects, such as the increase in flood disasters on the one hand and increasing water scarcity and droughts on the other (Kreibich et al., 2022).

The objective of this paper is to provide an overview of methods, sensors, platforms, and applications of optical measurement techniques for hydrographic mapping, focusing on through-water techniques, i.e., applications where the sensor is located above the water surface and measurements are performed through the air-water interface. The article is structured as follows: The first part of the paper introduces the principles of the three main optical methods in Section 2 (SDB), Section 3 (photo bathymetry), and Section 4 (laser bathymetry). Each section discusses the basics of the respective method but also addresses open research areas. The second part of the paper focuses on sensors and platforms in Section 5 and presents selected applications in Section 6. The article ends with a summary and concluding remarks in Section 7.

2. SPECTRALLY DERIVED BATHYMETRY

In spectrally derived bathymetry (SDB), a relationship is established between the radiometric image content and the water depth (Poicyn et al., 1970; Lyzenga, 1978; Philpot, 1989; Maritorena et al., 1994). The prerequisite for this is a thorough understanding of the complex interaction of solar radiation with the atmosphere, the water surface, the water body and finally the bottom of the water body as a function of the wavelength λ. In general, two approaches are used for deriving bathymetry from the radiometric image content: (i) physics-based methods (cf. Section 2.1) and (ii) regression-based approaches (cf. Section 2.2). The latter require independent reference data to train models describing the color-to-depth relationship, a task for which machine learning techniques play an important role in the modern literature (cf. Section 2.3).

2.1 Physics-based approach

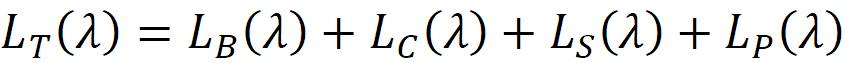

The total radiance arriving at the image sensor can be written as the sum of individual partial contributions (Legleiter et al., 2009):

$$L_T = L_B + L_C + L_S + L_P \tag{1}$$

In Equation 1, the total radiation LT incident at the sensor consists of the radiation LB reflected from the bottom of the water body, the radiation LC backscattered from the water column, the signal component LS from reflections at the water surface, and the component LP from backscattering particles in the atmosphere. Figure 5 shows the relationships schematically. The signal attenuation within the water column is exponential as a result of continuous forward and backward scattering as well as signal absorption in the water column. The signal contribution LB from the bottom depends on both water depth and bottom properties (reflectance, roughness). The bottom reflectance varies considerably for different soil types, ranging, e.g., from 0.1 to 0.3 for wet limestone and from 0.03 to 0.06 for wet gravel within the entire visible spectrum of 400–800nm (Legleiter et al., 2009). However, the spectral differences of the bottom play a major role only in very shallow water, since in deeper water the attenuation by the water column predominates (Röttgers et al., 2014; Pope and Fry, 1997).

The contribution from the water column LC is determined by the water’s optical properties. The contributing factors are once more absorption and scattering by pure water, but also turbidity caused by suspended sediment and organic matter (Grobbelaar, 2009). Depending on the viewing direction, the term LS may account for a large fraction of the total signal LT due to specular reflection at the water surface. Such specular reflections occur when the sunlight is directly reflected from the water surface into the FoV of a sensor pixel. Depending on the motion of the water surface (waves), specular pixels occur either sporadically or in clusters. In any case, the corresponding image areas must be masked during data preprocessing and deactivated for further processing. To a certain degree, the influence of sun glint can be mitigated based on the infrared channels of a multispectral image as described by Kay et al. (2009). Finally, LP represents path radiance backscattered from the atmosphere into the sensor’s FoV (Zhao et al., 2012; Stumpf and Pennock, 1989).
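The NIR-based glint mitigation mentioned above can be sketched as a linear regression between a visible band and the NIR band over a deep-water sample, in the spirit of the approaches reviewed by Kay et al. (2009). The following is only a minimal sketch: it assumes that the water-leaving NIR signal is negligible and that the glint contribution is linearly related across bands. The function name `deglint_band`, the array layout, and the sample mask are hypothetical and not taken from the cited work.

```python
import numpy as np


def deglint_band(vis, nir, sample_mask):
    """Subtract the glint component from one visible band.

    vis, nir    : 2D arrays with the visible and NIR band (same shape)
    sample_mask : boolean 2D array marking a glint-affected sample
                  over optically deep water (hypothetical user input)
    """
    vis = np.asarray(vis, dtype=float)
    nir = np.asarray(nir, dtype=float)
    # Slope of the visible-versus-NIR regression within the sample region
    slope = np.polyfit(nir[sample_mask], vis[sample_mask], 1)[0]
    # Assumption: the sample minimum approximates the glint-free NIR level
    nir_min = nir[sample_mask].min()
    return vis - slope * (nir - nir_min)
```

Pixels that are saturated by specular reflection cannot be recovered this way and would still have to be masked, as stated in the text.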

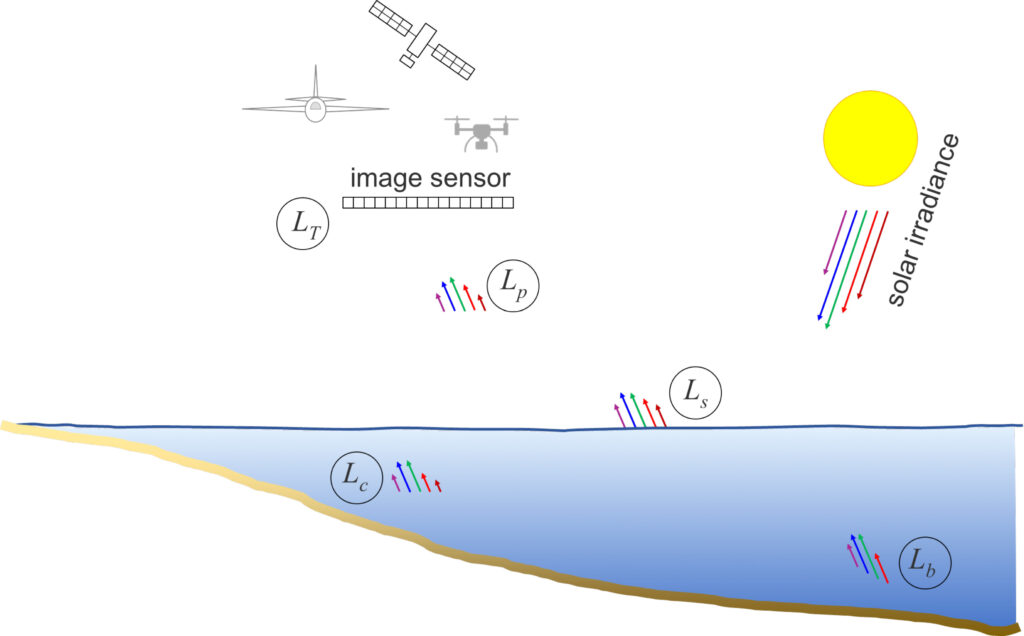

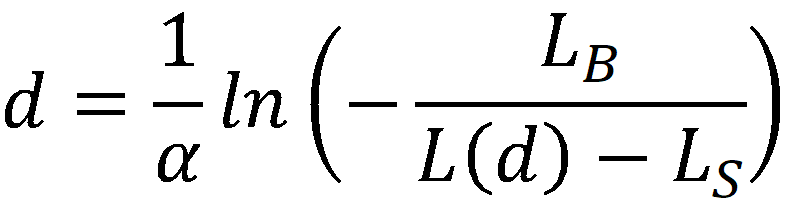

For images containing optically deep water, a simple physical relationship between water depth and backscatter strength can be formulated (Lyzenga et al., 2006):

$$L(d) = L_S + L_B \, e^{-\alpha d} \tag{2}$$

L(d) is the radiation received at the sensor after correction for atmosphere and any specular highlights (sun glint). The LS term in Equation 2 includes reflections from the water surface as well as backscatter from an infinitely deep water column. LB primarily describes the reflectivity of the bottom, but also includes transmission losses passing through the air-water interface and effects of volume scattering in the water body. The exponential coefficient α is the effective attenuation coefficient and consists of the sum of forward and backward scattered light components.

Equation 2 shows the exponential signal decay as a function of the water depth d and the water body’s optical properties (α) already mentioned above. By taking the logarithm, a linear relationship between the water depth and the radiometric image content can be established and the water depth d can be calculated directly by a simple transformation:

$$d = -\frac{1}{\alpha} \ln\left(\frac{L(d) - L_S}{L_B}\right) \tag{3}$$

Thus, assuming that both the water conditions and the bottom are homogeneous, the depth can already be determined from a single spectral image channel without external reference data. However, since both signal absorption in the water column and bottom reflectance depend on wavelength, in practice multiple radiometric bands of multispectral images are used to determine the unknown parameters remaining in Equation 3. This is usually done as part of an optimization task based on reference data from terrestrial or echosounder surveys (Lyzenga et al., 2006).
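The single-band inversion can be illustrated with a few lines of Python. The sketch assumes the exponential model L(d) = LS + LB·exp(−αd) described in the text and treats LS, LB and α as known, homogeneous parameters. The function names and the numerical values in the usage note are illustrative assumptions, not calibrated quantities.

```python
import numpy as np


def radiance_from_depth(d, L_S, L_B, alpha):
    """Forward model: deep-water signal plus exponentially attenuated bottom signal."""
    return L_S + L_B * np.exp(-alpha * np.asarray(d, dtype=float))


def depth_from_radiance(L, L_S, L_B, alpha):
    """Invert the forward model for depth; NaN where no bottom signal remains."""
    signal = np.atleast_1d(np.asarray(L, dtype=float)) - L_S
    depth = np.full(signal.shape, np.nan)
    valid = signal > 0  # the logarithm is only defined above the deep-water level
    depth[valid] = -np.log(signal[valid] / L_B) / alpha
    return depth
```

For example, `depth_from_radiance(radiance_from_depth([0.5, 2.0], 0.02, 0.1, 0.3), 0.02, 0.1, 0.3)` reproduces the input depths, while radiances at or below LS yield NaN because no bottom signal can be separated from the deep-water signal.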

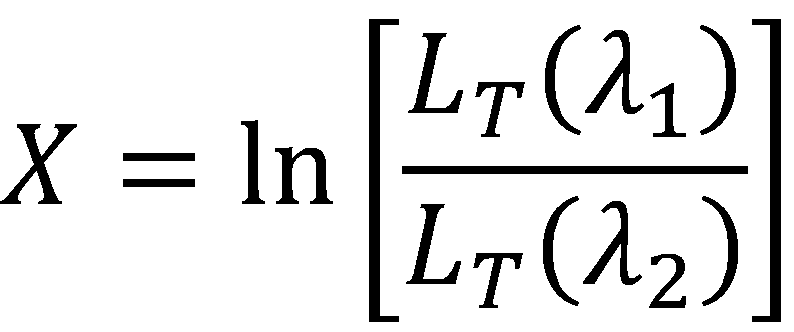

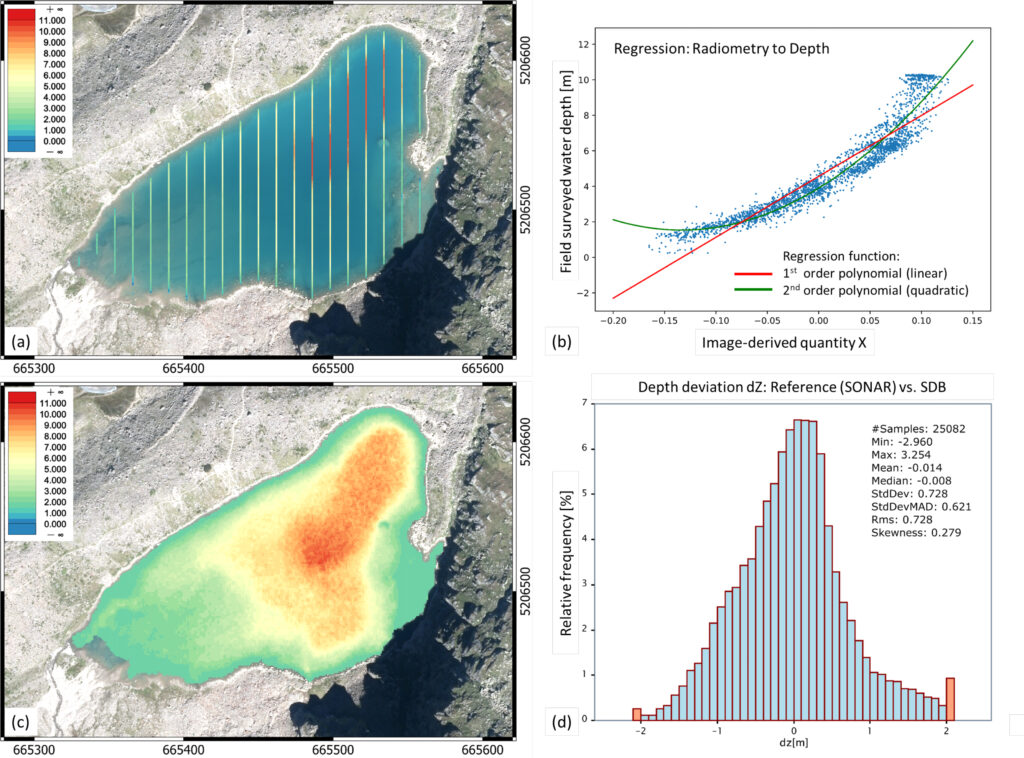

2.2 Regression-based approach

While Equations 2 and 3 represent a physically motivated model, one of the disadvantages is that LB depends on both water depth (d) and bottom reflectance (RB), so that both effects are entangled. An approach to overcome this limitation was introduced by Stumpf et al. (2003), who calculated the ratio of two spectral bands with different wavelengths (or band indices), which was found to be approximately constant and thus to a certain extent independent of variations in bottom reflectance. Still, it assumes the presence of optically deep regions, i.e., areas where LB is entirely dominated by the backscatter of the (endlessly deep) water column and does not contain any signal from the bottom. However, this is not necessarily the case, e.g., when mapping clear and moderately deep alpine rivers or shallow coastal areas like coral reefs with UAV images (Alevizos et al., 2022). In the absence of optically deep water, the deepest part of the scene can still replace LB at d=∞ as a pragmatic solution (Legleiter, 2016). A more generic solution is to apply the log-transformed band ratio (Stumpf et al., 2003) and to identify the optimum band combination in an approach referred to as Optimum Band Ratio Analysis (OBRA; Legleiter et al., 2009). This results in an image-derived quantity X:

$$X = \ln\left(\frac{L(\lambda_1)}{L(\lambda_2)}\right) \tag{4}$$

which is approximately linearly related to the water depth d. The empirical relationship between X and d is finally established by regressing reference depths from field surveys against X. A linear regression function is sufficient if Equation 4 adequately accounts for all non-linear effects; otherwise, higher-order functions (e.g., polynomials of degree n) can be used. An example is shown in Figure 6, where depth profiles of a mountain lake in Tyrol, Austria, were surveyed with a compact SONAR system and an areal depth map was created via SDB using the OBRA approach based on aerial RGBI images.
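The band selection and regression step can be sketched as follows. This is a simplified illustration of the OBRA idea and not the implementation of Legleiter et al. (2009): it assumes that band radiances have already been sampled at the reference depth locations, tests every band pair, and keeps the pair whose log-transformed ratio yields the highest coefficient of determination in a linear fit. The function name `obra` and the dictionary-based input layout are assumptions made for this example.

```python
import itertools
import numpy as np


def obra(bands, depths):
    """Return the band pair whose log ratio best predicts depth linearly.

    bands  : dict mapping a band name to a 1D array of radiances sampled
             at the reference points (hypothetical input layout)
    depths : 1D array of reference depths at the same points
    """
    depths = np.asarray(depths, dtype=float)
    best = None
    for (name1, b1), (name2, b2) in itertools.combinations(bands.items(), 2):
        # Image-derived quantity X: log-transformed band ratio
        x = np.log(np.asarray(b1, dtype=float) / np.asarray(b2, dtype=float))
        slope, intercept = np.polyfit(x, depths, 1)
        residuals = depths - (slope * x + intercept)
        r2 = 1.0 - residuals.var() / depths.var()
        if best is None or r2 > best["r2"]:
            best = {"pair": (name1, name2), "slope": slope,
                    "intercept": intercept, "r2": r2}
    return best
```

The returned slope and intercept can then be applied to the log ratio of the full image bands to obtain an areal depth map. Swapping numerator and denominator only changes the sign of X, so testing unordered pairs is sufficient for a linear fit.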

2.3 Machine learning in SDB

In addition to the well-established physics- and regression-based depth inversion methods discussed above, machine learning (ML) approaches have gained increasing attention over the past decade for various geoscience applications in general (Dramsch, 2020) and SDB in particular (Al Najar et al., 2021). While a core strength of ML is classification, convolutional neural networks (CNN) in particular have proven to be effective depth predictors due to the ability of convolutions to approximate arbitrary functions (Zhou, 2020; Murtagh, 1991). This is particularly important in the context of SDB because the interaction of light with the water surface, water column, and water bottom is complex, and simple models that focus on hand-crafted features such as specific log-transformed band ratios may not be able to capture the full complexity of spectral image information.

Among the variety of classical ML approaches, simple artificial neural networks (Makboul et al., 2017), k-nearest neighbor regression (Legleiter and Harrison, 2019), random forest (Sagawa et al., 2019; Yang, Ju et al., 2022), gradient boosting (Susa, 2022), multilayer perceptrons (Duan et al., 2022), back-propagation neural networks (Wu et al., 2022), ensemble learning (Eugenio et al., 2022) and support vector machines (Misra et al., 2018) have been successfully applied for deriving bathymetry from multispectral images. Beyond that, a current trend towards deep neural networks can be observed. The employed methods include simple neural networks (Niroumand-Jadidi et al., 2022a,b), locally adaptive back-propagation neural networks (Liu et al., 2018), recurrent neural networks (Dickens and Armstrong, 2019), deep learning based image super-resolution (Sonogashira et al., 2020), and CNNs (Al Najar et al., 2021; Mandlburger et al., 2021; Lumban-Gaol et al., 2022; Ji, Yang, Tang et al., 2022; Mudiyanselage et al., 2022). It is noted that the application field is not restricted to coastal areas but also includes inland waters like rivers and lakes (Legleiter and Harrison, 2019; Mandlburger et al., 2021).

3. MULTIMEDIA PHOTOGRAMMETRY

A complementary image-based method for depth determination is multimedia photogrammetry. This is a purely geometric method, the fundamentals of which date back to the middle of the 20th century (Rinner, 1948). The technique is applied to capture the underwater topography of hydrodynamic lab facilities based on images from terrestrial or crane-mounted cameras, but is also used to survey rivers based on crewed and uncrewed aerial images or even high-resolution stereo satellite images. The fundamentals are reviewed in the following subsections.

3.1 Two-media case

With the advent of digital photogrammetry (Förstner and Wrobel, 2016) and automated evaluation methods from the field of Computer Vision like Structure from Motion (SfM) (Schonberger and Frahm, 2016) and Dense Image Matching (DIM; Hirschmuller, 2008; Haala and Rothermel, 2012; Rothermel et al., 2012; Wenzel et al., 2013), the topic of photogrammetric depth determination for capturing topographic data from stereo images has received increased attention. This also applies to multimedia photogrammetry. In case the sensor is in the air or on ground and the surveyed objects and surfaces are submerged, this results in a two-media problem also referred to as through-water photogrammetry.

Modern literature discusses stereo image-based acquisition of underwater topography for running waters (Butler et al., 2002; Westaway et al., 2001; Dietrich, 2016) and for coastal areas based on stereo images captured with drones, crewed aircraft and satellites (Hodül et al., 2018; Cao et al., 2019; Agrafiotis et al., 2020; Wang, Chen, et al., 2022).

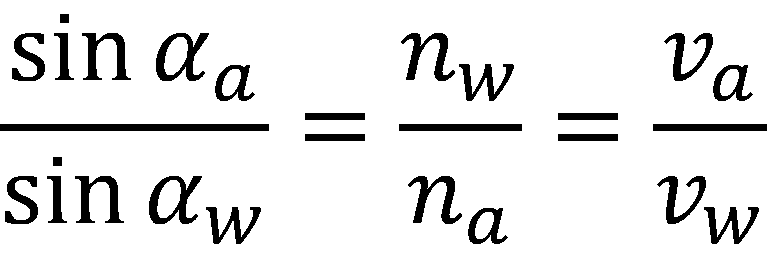

Building on the basic concept of photogrammetry (Kraus, 2007; Förstner and Wrobel, 2016), underwater topography can be derived from stereo images provided that the interior and exterior orientation of the images is known (Mulsow, 2010) and the water surface can be reconstructed with sufficient accuracy (Zou et al., 2016; Engelen et al., 2018). Image orientation is facilitated in the through-water case if the water body is small and does not cover the entire image area. If enough tie points are available on dry ground, standard techniques for image orientation (Förstner and Wrobel, 2016; Kraus, 2007) can be used. In all other cases, a strict consideration of the refraction effect is required not only for generating dense underwater point clouds, but also for matching and reconstruction of tie points used for image orientation (Maas, 2015; Mulsow, 2010). Once the interior and exterior orientation of the images are resolved and homologous points of the water bottom can be identified in at least two images, the apparent intersection of the corresponding image rays still needs to be corrected for refraction at the water surface (Luhmann et al., 2019). The basis for this is Snell’s law of refraction:

$$\frac{\sin\alpha_a}{\sin\alpha_w} = \frac{n_w}{n_a} = \frac{v_a}{v_w} \tag{5}$$

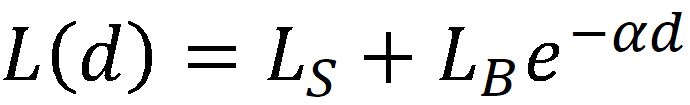

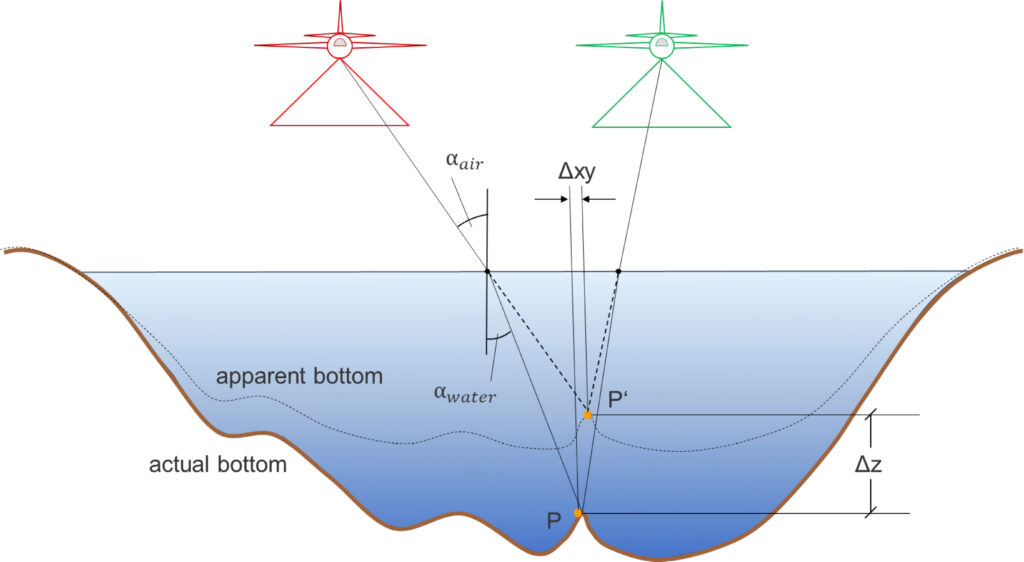

Equation 5 reveals that the sines of the incidence angles of the air- and water-side image rays (αa and αw) are inversely proportional to the respective refractive indices of air (na≈1.00) and water (nw≈1.33) and directly proportional to the propagation velocities (va≈300,000km/s, vw≈225,564km/s). Figure 7 shows the refraction of the image rays toward the perpendicular during the transition from the optically thinner medium of air to the optically denser medium of water. As a result, the apparent point P’, which results from the straight-line intersection of the image rays, is too high and must be corrected downward to the actual point P at the bottom of the water body by applying the refraction correction. Detailed descriptions of refraction correction can be found in Kotowski (1988) and Murase et al. (2008). With respect to bundle block adjustment, Maas (2015) and Kahmen et al. (2020) specify formulae to explicitly integrate refractive interfaces into the photogrammetric processing pipeline.
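The effect of Snell's law on photogrammetrically derived depths can be illustrated with a small worked example. The sketch below rests on strongly simplifying assumptions that are not part of the rigorous models cited above: a planar, horizontal water surface, and an apparent point that lies vertically above the true bottom point, so that both rays share the same horizontal offset from the surface intersection. Under these assumptions the true depth is the apparent depth scaled by tan(αa)/tan(αw). The function names are illustrative.

```python
import math

N_AIR = 1.00
N_WATER = 1.33


def refracted_angle(alpha_air, n_air=N_AIR, n_water=N_WATER):
    """Water-side ray angle (radians) from Snell's law of refraction."""
    return math.asin(n_air / n_water * math.sin(alpha_air))


def corrected_depth(apparent_depth, alpha_air, n_air=N_AIR, n_water=N_WATER):
    """Scale an apparent depth to the true depth (flat-surface assumption)."""
    if alpha_air == 0.0:
        # Small-angle limit of tan(alpha_a) / tan(alpha_w)
        return apparent_depth * n_water / n_air
    alpha_water = refracted_angle(alpha_air, n_air, n_water)
    return apparent_depth * math.tan(alpha_air) / math.tan(alpha_water)
```

For a near-nadir ray the correction factor approaches the refractive index of water (about 1.33), and it grows with the incidence angle, which is one reason why the correction depends on the individual point/camera geometry.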

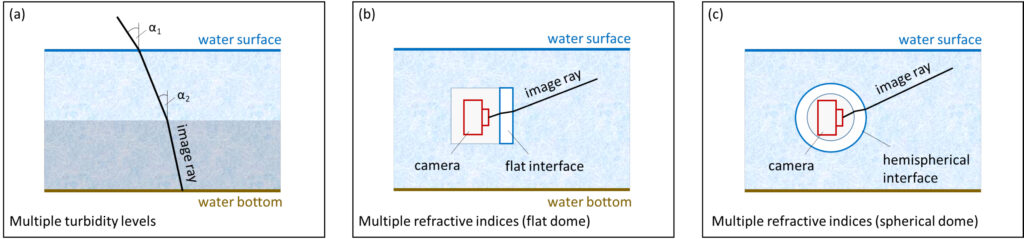

3.2 Multiple refractive interfaces

While the water surface is the only refractive interface in the simple two-media case, multiple refractive interfaces may be present in other situations. This applies to through-water photo bathymetry in case there are multiple layers with different refractive indices within the water body, but also when the sensor is underwater and the camera is sheltered against water ingress by a surrounding housing using either flat or dome ports. In the latter case, the material of the housing exhibits a certain thickness depending on the pressure it has to withstand, and the image rays are refracted both when entering and when exiting the port (cf. Figure 8).

Maas (2015) describes a rigorous geometric correction model for the multi-media case, which is generic enough to serve as the basis for integration into standard photogrammetric bundle block adjustment software. This paper also discusses the accuracy potential of multimedia photogrammetry and highlights the impact of image network geometry, interface planarity, refractive index variations and dispersion as well as diffusion effects under water, which result in an accuracy degradation by about a factor of two under relatively favorable conditions.

Menna et al. (2016) investigate the use of consumer-grade cameras in combination with hemispherical dome ports for deriving accurate underwater 3D point clouds. The same authors published a review on flat and dome ports, in which they discuss the respective pros and cons and conclude that hemispherical dome ports outperform the simpler flat ports both with respect to the residuals in image space and concerning precision and accuracy of the derived 3D object points (Menna et al., 2017a). It is noted that theoretically, if the camera’s projection center is located exactly in the center of a hemispherical dome and all image rays are perfectly radial, no refraction would occur at the dome’s interface. However, as the projection center is located behind the lens system of the camera, refraction also occurs when using hemispherical domes (cf. Figure 8c).

3.3 SfM and Dense Image Matching

With the advent of Computer Vision-based techniques like SfM (Schonberger and Frahm, 2016) and Dense Image Matching (Hirschmuller, 2008) in modern digital photogrammetry (Förstner and Wrobel, 2016), many photogrammetric software solutions surfaced implementing the full processing pipeline from image orientation and camera calibration, via the creation of 3D point clouds to final model derivation (3D meshes, Digital Surface Models). The most prominent commercial products are Metashape (Agisoft, 2022), Pix4D Mapper (Pix4D, 2022), the Match-T/Match-AT/OrthoMaster/UAS-Master software suite (Trimble, 2022a), CapturingReality (Trimble, 2022c), PhotoModeller (Trimble, 2022b) and SURE (nFrames, 2022) as well as their open source counterparts like MicMac (Rupnik et al., 2017), Bundler (Bundler, 2022), and VisualSFM (Wu, 2022), to mention just a few. All these products can generally also be used for photo bathymetry (Burns and Delparte, 2017).

In general, two major steps can be identified in any photogrammetric processing pipeline: (i) image orientation and camera calibration and (ii) point cloud generation via feature-based, area-based or dense matching approaches. The former (i.e., image orientation) has already been discussed in Section 3.1. Concerning dense surface reconstruction, the derivation of topographic point clouds can be considered mature, but there are still open research questions in photo bathymetry (Mandlburger, 2019). This especially holds for non-static water surfaces in the through-water case. While in the topographic case, more stereo image partners generally increase the accuracy of the resulting point cloud, direction-dependent image ray refraction can lead to a deterioration of the results in photo bathymetry. The problem of varying refraction effects for every point/camera combination in an SfM point cloud is also addressed in Dietrich (2016). The author presents a multi-camera refraction correction and reports accuracy in the order of 0.1% of the flying altitude, i.e., 4cm at a flying altitude of 40m above ground level. Starek and Giessel (2017) propose a denoising method to filter underwater SfM points and combine multimedia photogrammetry and spectral depth inversion to generate seamless underwater models. For the underwater case, the topic of dense object reconstruction is discussed by Hatcher et al. (2020) for mapping coral reefs using a towed surface vehicle with an onboard survey-grade Global Navigation Satellite System (GNSS) and five rigidly mounted downward-looking cameras with overlapping views of the seafloor providing sub-centimeter resolution.

3.4 Machine learning in photo bathymetry

It already became clear in Section 2.3 that ML approaches play an important role in SDB. This is much less the case for multimedia photogrammetry, as the technique is purely geometric and deterministic approaches exist for most problems like pose estimation and point cloud generation. However, the difficulty of capturing and modeling the dynamic water surface with sufficient temporal and spatial resolution opens the floor for applying ML-based techniques for refraction correction. Agrafiotis et al. (2019) introduced a support vector regression (SVR) model (DepthLearn), which was trained using reference depths captured with bathymetric LiDAR. The authors showed that the SVR model can successfully compensate for the systematic underestimation of the raw SfM-derived water depths. In a follow-up work, Agrafiotis et al. (2020) apply the DepthLearn model to correct refraction in image space. With the resulting refraction-free images, dense image matching pipelines based on multi-view stereo deliver unbiased bathymetric maps. The accuracy of the ML-based refraction correction could be further improved by using synthetic data for model training (Agrafiotis et al., 2021). This was shown for aerial images from both crewed and uncrewed platforms.

4. LASER BATHYMETRY

In contrast to the passive methods described in Sections 2 and 3, laser bathymetry in general and airborne laser bathymetry (ALB) in particular represents an active technique for mapping shallow waters using a pulsed green laser (Philpot, 2019; Guenther et al., 2000). The basics and selected specific aspects of laser bathymetry are detailed in the following subsections.

4.1 ALB basics

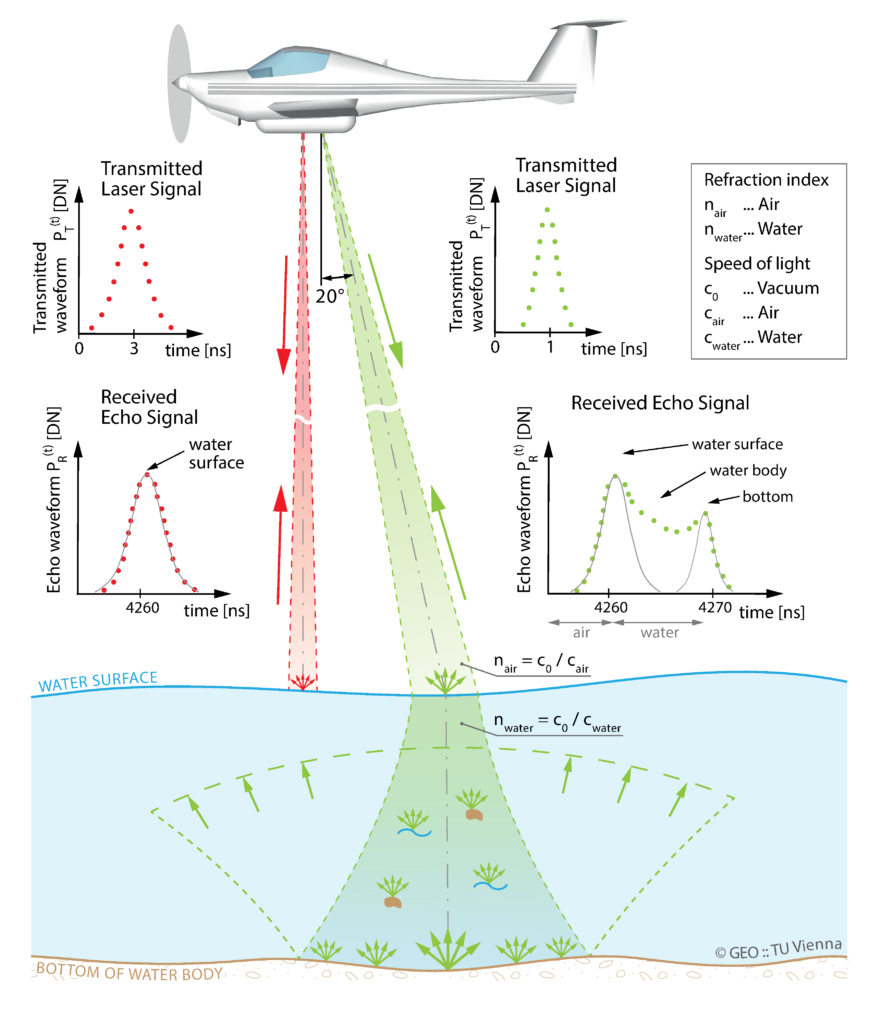

In ALB, the distance between sensor and target is determined by measuring the round-trip travel time of a very short laser pulse (wavelength λ = 532nm, pulse duration ∆t=1–10ns) through air and water (Guenther et al., 2000). The measurement process is schematically sketched in Figure 9.

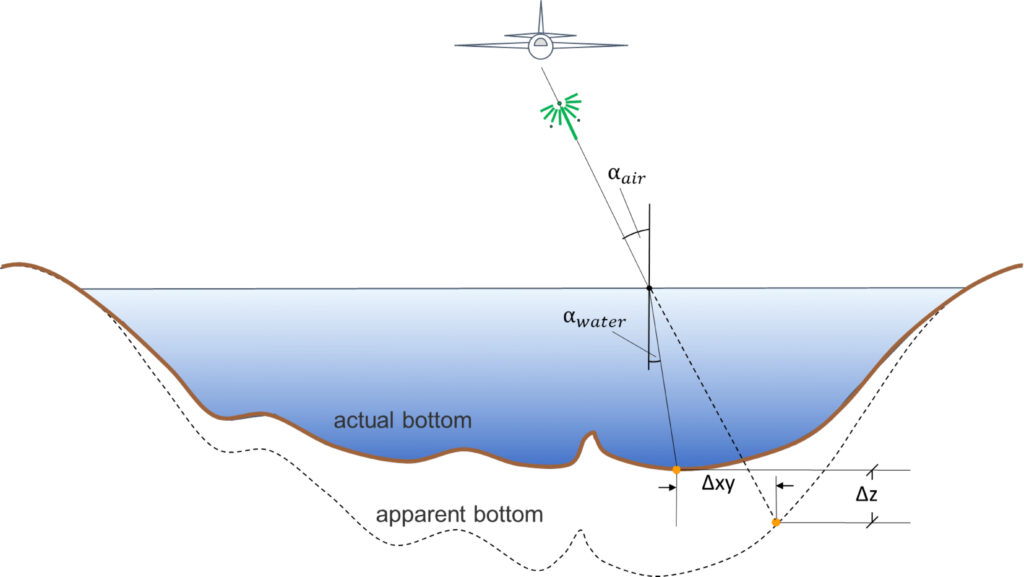

After pulse emission and traveling through the atmosphere, the laser beam is partially reflected at the water surface and the remaining part penetrates into the water body. Upon entering the water column, the laser beam changes both its direction and its propagation speed according to Snell’s law of refraction, depending on the water’s optical properties (cf. Equation 5, vW≈vL/1.33≈225,564km/s). Due to the lower speed of light in water, the uncorrected 3D underwater measurement points appear too deep, in contrast to multimedia photogrammetry, and must be corrected upward accordingly (cf. Figure 10).

In the water column, the laser radiation is attenuated by continuous beam refraction and signal absorption, so that after reflection of the laser pulse at the bottom and the corresponding return path, only a small part of the laser energy arrives at the sensor. Thus, all bathymetric sensors employ very sensitive detectors (Quadros, 2013; Mandlburger, 2020). The general relationship between transmitted and received energy is described by the laser-radar equation (Wagner, 2010), which for bathymetric applications is divided into the signal components from the water surface, the water column, the bottom of the water body, and background radiation including losses in the atmosphere (Abdallah et al., 2012; Tulldahl and Steinvall, 2004).

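$$P_R = P_{WS} + P_{WC} + P_B + P_{BG} \tag{6}$$

Here, P_R denotes the received signal, and P_WS, P_WC, P_B and P_BG denote the contributions from the water surface, the water column, the bottom of the water body, and the background, respectively.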

Equation 6 has the same form as Equation 1. The signal losses in laser bathymetry are thus equivalent to those already described in Section 2 for SDB. This also holds for the exponential attenuation in the water column. A key advantage of laser bathymetry is that the signal attenuation, usually described by the effective attenuation coefficient k, can be estimated from the asymmetric shape of the recorded waveforms (Richter et al., 2017; Schwarz et al., 2017). There is an approximate inverse relationship between the attenuation coefficient k and the Secchi depth sd (sd≈1.6/k). The Secchi depth is an empirical measure for water turbidity and refers to the distance beyond which the black and white quadrants of a 20cm-diameter disk lowered into the water on a rope from a boat can no longer be distinguished from each other (Effler, 1988). The manufacturers of bathymetric sensors usually describe the depth measurement performance in multiples of the Secchi depth (Quadros, 2013).
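How an attenuation coefficient and the corresponding Secchi depth might be derived from a waveform can be sketched as follows. The example is a deliberately simplified assumption and not the method of the cited works: it supposes that the water column part of the waveform decays as exp(−2kd) along the two-way path, with d = vw·t/2, so that the logarithm of the amplitude is linear in time with slope −k·vw. The function names and the refractive index default are illustrative.

```python
import numpy as np

C_VACUUM_M_PER_NS = 0.299792458  # speed of light in vacuum in m/ns
N_WATER = 1.33                   # illustrative refractive index of water


def attenuation_from_waveform(t_ns, amplitude, n_water=N_WATER):
    """Estimate k (1/m) from the water column segment of a waveform.

    Assumed model: amplitude ~ exp(-2 k d) with d = v_w * t / 2,
    hence ln(amplitude) = const - k * v_w * t.
    """
    v_w = C_VACUUM_M_PER_NS / n_water
    slope, _ = np.polyfit(np.asarray(t_ns, dtype=float),
                          np.log(np.asarray(amplitude, dtype=float)), 1)
    return -slope / v_w


def secchi_depth(k):
    """Approximate Secchi depth (m) from k (1/m) via sd = 1.6 / k."""
    return 1.6 / k
```

With k = 0.4 per meter, for instance, the approximation gives a Secchi depth of 4m, so a sensor specified with a depth performance of 1.5 Secchi depths would be expected to reach roughly 6m under these conditions.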

4.2 Water surface detection and refraction correction

The detection of the water surface is a prerequisite for precise refraction and run-time correction of the raw measurements. Most bathymetric scanners operate an additional near-infrared channel (λ=1064nm) together with the green laser for this purpose, since signal absorption in water is very high for near-infrared (NIR) radiation, and it therefore penetrates only minimally into the water column (Guenther et al., 2000; Ewing, 1965). If no NIR channel is present, the air-water interface needs to be modeled from the green channel reflections alone. Since in this case the echoes from the water surface often represent a mixture of direct reflection and volume scattering in the first centimeters of the water column (Guenther et al., 2000), special evaluation and modeling methods are required. Especially for topo-bathymetric scanners with a small laser footprint, the non-planarity and dynamics of the water surface (waves) have to be considered to obtain precise 3D point coordinates of the water bottom (Westfeld et al., 2017).

The employed approaches include statistical methods, which aggregate neighboring (near) surface echoes to define the water level height (Mandlburger et al., 2013; Mandlburger and Jutzi, 2019), methods based on clustering with connectivity constraints (Roshandel et al., 2021), and strict mathematical modeling of the entire underwater laser path resulting in water surface height estimates for each laser pulse (Schwarz et al., 2019). Next to detecting distinct surface echoes in the laser measurements, it is equally important to reconstruct the water surface as a continuous surface providing both elevation and slope at arbitrary positions. For this purpose, raster models (Mandlburger et al., 2013), triangular irregular networks (Ullrich and Pfennigbauer, 2012) or free-form surfaces (Richter et al., 2021a) are in use.

Once the water surface is reconstructed, refraction and signal run-time correction can be performed. In the recent literature, Xu et al. (2021) present a method for strict correction of sea surface wave-induced refraction errors for each bottom point by calculating the water surface normal directions for each laser pulse entering the water body. To calculate the air-side angle of incidence αa used in Equation 5, both the known direction vector of the laser beam and the normal direction of the water surface are required. From this, the resulting water-side angle can then be calculated if the refractive indices of air and water (na, nw) are known. A similar approach, also considering water surface slopes, is proposed by Yang et al. (2017) based on sea surface profiles and ray tracing. Schwarz et al. (2021) highlight the difference between phase and group velocity, which was long neglected in laser bathymetry. While the phase velocity (i.e., speed of laser light @532nm) is decisive for beam deflection, the group velocity (i.e., speed of laser pulse) must be used for the ToF correction.
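The two correction steps can be sketched for a single laser pulse as follows. This is an illustrative sketch under stated assumptions, not the procedure of any of the cited papers: the function names are hypothetical, the refractive index of air is set to 1.0, the phase index of 1.33 is taken from Section 4.1, and the group index of 1.36 is an assumed example value. The beam is bent with the vector form of Snell's law using the phase index, and the sub-surface range is shortened using the group index.

```python
import math


def refract(d, n, n_air=1.0, n_water=1.33):
    """Refract unit direction d at a surface with unit normal n (vector form of Snell's law).

    d points from the sensor towards the water, n points from the water
    towards the sensor, so that -dot(d, n) is the cosine of the air-side
    angle of incidence.
    """
    eta = n_air / n_water
    cos_i = -sum(di * ni for di, ni in zip(d, n))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)  # always < 1 when going from air to water
    cos_t = math.sqrt(1.0 - sin2_t)
    k = eta * cos_i - cos_t
    return tuple(eta * di + k * ni for di, ni in zip(d, n))


def correct_bottom_point(p_surface, d, n, raw_range, n_phase=1.33, n_group=1.36):
    """Return the corrected bottom point for one pulse.

    raw_range is the sub-surface part of the measured range [m] as computed
    with the speed of light in air. Direction uses the phase index, run-time
    correction uses the group index (assumed value).
    """
    t = refract(d, n, n_water=n_phase)
    water_range = raw_range / n_group
    return tuple(pi + water_range * ti for pi, ti in zip(p_surface, t))


if __name__ == "__main__":
    alpha = math.radians(20.0)  # air-side angle of incidence on a horizontal surface
    beam = (math.sin(alpha), 0.0, -math.cos(alpha))
    print(correct_bottom_point((0.0, 0.0, 0.0), beam, (0.0, 0.0, 1.0), raw_range=2.0))
```

For a tilted water surface, only the normal vector n changes; it would be taken from the reconstructed water surface model at the point where the beam enters the water.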

All the mentioned aspects as well as sensor orientation and calibration contribute to the total error budget of laser bathymetry. Applying rigorous error propagation, Eren et al. (2019) published a model for estimating the Total Vertical Uncertainty (TVU) of bathymetric LiDAR.

4.3 Full waveform analysis

Unlike topographic laser scanning, where simple time-to-digital converters can be used to measure the round-trip time of a laser pulse (Ullrich and Pfennigbauer, 2016), bathymetric LiDAR requires digitization of the entire backscatter waveform, referred to as full waveform (FWF), due to the complex interaction of the laser pulse with the water medium (Guenther et al., 2000). FWF processing can either be done online during the flight (Pfennigbauer and Ullrich, 2010; Pfennigbauer et al., 2014) or off-line, if the waveforms are additionally stored on hard disk. Comparisons of different techniques for processing laser bathymetry waveforms were published by Wang et al. (2015) and Allouis et al. (2010). The standard methods include peak detection, average square difference function, Gaussian decomposition, quadrilateral fitting, Richardson–Lucy deconvolution, and Wiener filter deconvolution. In addition, Schwarz et al. (2017) introduced a physically motivated approach referred to as exponential decomposition. The approach was later narrowed down to typical bathymetric mapping situations with signal interaction at the surface, volume and bottom (Schwarz et al., 2019). This explicit model also addresses the fundamental problem of resolving very shallow water depths, where the returned signal peaks from surface and bottom overlap. It thus allows measuring ultra-shallow water depths and provides a strictly seamless water-land transition. The topic of resolving very shallow water depths is also addressed in Yang, Qi et al. (2022), where a signal resolution enhancement model and a fractional differentiation mathematical tool are used together with Gaussian decomposition (Wagner et al., 2006) to measure water depths of less than 10cm.
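Why surface and bottom echoes overlap in very shallow water can be illustrated with a back-of-the-envelope estimate. The sketch below converts a pulse duration into the water depth it spans on the two-way path. It assumes, as a rough rule of thumb that is not taken from the cited papers, that two echoes start to merge once they are separated by less than one pulse duration, and it uses the refractive index of 1.33 from Section 4.1; the function name is hypothetical.

```python
C_VACUUM = 299_792_458.0  # speed of light in vacuum [m/s]


def pulse_depth_extent(pulse_duration_ns: float, n_water: float = 1.33) -> float:
    """Water depth [m] spanned by one pulse duration on the two-way path."""
    v_water = C_VACUUM / n_water
    return v_water * pulse_duration_ns * 1e-9 / 2.0


if __name__ == "__main__":
    for tau in (1.0, 2.0, 7.0):  # pulse durations [ns], illustrative values
        print(f"{tau:.0f}ns -> {pulse_depth_extent(tau):.2f}m")
```

Under these assumptions, a 1ns pulse spans roughly 0.11m of water and a 7ns pulse roughly 0.79m, which indicates why simple peak detection fails at depths of a few decimeters and model-based decomposition is needed.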

An inherent problem in laser bathymetry is the restricted depth penetration capability due to (i) the general attenuation in water and (ii) water turbidity caused by floating or suspended sediment. Kogut and Bakula (2019) analyzed neighboring return waveforms to identify missing bottom points based on the prior knowledge of existing bottom points in the vicinity. To explicitly enhance the signal-to-noise ratio (SNR), Mader et al. (2022) propose a non-linear full waveform stacking technique. The authors first average neighboring return waveforms and subsequently identify surface and bottom points in the original waveforms within a restricted search corridor. With this method, an extra 30% of depth penetration is achieved without smoothing the resulting point clouds. Stacking and other full waveform processing methods are also reported in Steinbacher et al. (2021) along with the implementation in a bathymetric software suite.
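The two-stage idea of stacking followed by a search in the original waveforms can be sketched as follows. This is a deliberately simplified variant with plain averaging and a maximum search; it is not the implementation of Mader et al. (2022), and all function names as well as the parameters `surface_end` and `half_width` are hypothetical.

```python
def stack_waveforms(waveforms):
    """Average equally long, time-aligned waveforms sample by sample."""
    n = len(waveforms)
    return [sum(samples) / n for samples in zip(*waveforms)]


def argmax_in_window(waveform, start, stop):
    """Index of the largest sample in waveform[start:stop] (clipped to valid indices)."""
    start = max(start, 0)
    stop = min(stop, len(waveform))
    return max(range(start, stop), key=lambda i: waveform[i])


def bottom_peaks_via_stack(waveforms, surface_end, half_width):
    """Locate weak bottom echoes with the help of a stacked waveform.

    1. Average the neighbouring waveforms to raise the signal-to-noise ratio.
    2. Find the bottom peak in the stack behind the surface echo (index >= surface_end).
    3. Search every original waveform only within +/- half_width samples of that peak.
    Returns the guide index from the stack and one peak index per waveform.
    """
    stacked = stack_waveforms(waveforms)
    guide = argmax_in_window(stacked, surface_end, len(stacked))
    peaks = [argmax_in_window(w, guide - half_width, guide + half_width + 1)
             for w in waveforms]
    return guide, peaks


if __name__ == "__main__":
    neighbours = [
        [0, 10, 1, 0, 2, 0, 3, 1, 0, 0],
        [0, 10, 1, 0, 0, 0, 1, 3, 0, 2],
        [0, 10, 1, 4, 0, 0, 3, 1, 0, 0],  # noise spike at index 3 exceeds the bottom echo
    ]
    print(bottom_peaks_via_stack(neighbours, surface_end=3, half_width=1))
```

Because the final peak positions come from the original waveforms, each pulse keeps its own bottom estimate, which is why such a scheme does not smooth the resulting point cloud.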

4.4 Laser triangulation

Most bathymetric laser scanners operate based on the ToF measurement principle, i.e., the round-trip time of a laser pulse is measured and converted into a distance. Especially for underwater close-range applications, laser lightsheet triangulation is an alternative to ToF scanning. To operate laser triangulation in a hydrographic context, a green laser line is projected onto the object and the illuminated line or curve, respectively, is captured by a camera mounted at a fixed base with respect to the laser projector (Sardemann et al., 2022). The imaging system is installed inside a watertight housing and the sensors need to be placed obliquely within the housing to obtain near-orthogonal ray intersection angles. Refraction effects at the air-glass interface inside and the glass-water interface outside the housing need to be considered to obtain precise 3D object coordinates.

One of the main advantages over ToF-based laser bathymetry is that highly accurate underwater measurements can be achieved at much lower costs. In the recent past, different implementations of bathymetric scanners based on the lightsheet triangulation principle have been described (Bleier et al., 2019; Xue et al., 2021; Lee et al., 2021), all of which feature sub-mm precision for a limited depth range of less than 50cm. An exhaustive review of underwater scanners, including but not limited to triangulation-based scanners, can be found in Castillón et al. (2019).

4.5 Hybrid methods

While multimedia photogrammetry and laser bathymetry constitute self-contained methods, SDB relies on external reference data for model calibration. For this purpose, SONAR data are often used for water areas beyond a wadeable depth of about 1.5m, and terrestrial surveys (GNSS, total station) otherwise. The advent of hybrid sensor systems combining laser scanners and cameras on the same platform now makes it possible to use remote sensing based techniques for generating the reference data for training and calibration of the respective SDB models. This specifically holds for crewed and uncrewed aerial platforms (Mandlburger, 2020). However, satellite-based techniques also benefit from the availability of spaceborne LiDAR sensors with bathymetric capabilities (Parrish et al., 2019).

Recent publications therefore use hybrid processing pipelines. Ji, Yang, Tang et al. (2022) use ALB point clouds for precise feature-based registration (orientation) of satellite images. Combinations of photo bathymetry and spectral depth estimation are published in Slocum et al. (2020) and Starek and Giessel (2017) based on aerial images only. Mandlburger et al. (2021) use the water surface and bottom models derived from topo-bathymetric LiDAR as reference for training, testing and validating a bathymetric CNN (BathyNet) to derive bathymetry from concurrently captured multispectral images (RGB+coastal blue). Such a trained network can potentially be used later for camera-only surveys. An example where UAV-based photo bathymetry was used to train depth inversion models for satellite images is presented in Wang, Chen et al. (2022).

On a global scale, the combination of spaceborne LiDAR, specifically from the Advanced Topographic Laser Altimeter System (ATLAS) aboard ICESat-2 (Markus et al., 2017), and multispectral satellite images from Sentinel-2, Landsat-8, WorldView-2, Pleiades, etc. is increasingly used for SDB solely relying on remote sensing data. Examples for using ATLAS data for calibration of spectral depth inversion models are published in Thomas et al. (2022), Le et al. (2022), Zhang, Chen, Le et al. (2022), Herrmann et al. (2022), Hartmann et al. (2021), Cao et al. (2021) and Le Quilleuc et al. (2022).

5. SENSORS AND PLATFORMS

Optical hydrographic methods are employed on both global and local scales. The measurement range varies from 800km to a few centimeters. The sensors are operated from spaceborne, crewed or uncrewed airborne, terrestrial, or underwater platforms. In the underwater case, the sensors are carried by divers, remotely operated vehicles (ROV) or autonomous underwater vehicles (AUV). An overview of the platforms and scales used in optical hydrography is provided in Chemisky et al. (2021). The following subsections introduce a representative selection of sensors and platforms starting with satellite sensors (Section 5.1) via airborne sensors based on crewed (Section 5.2) and uncrewed (Section 5.3) platforms, to underwater sensors (Section 5.4).

5.1 Space-borne sensors

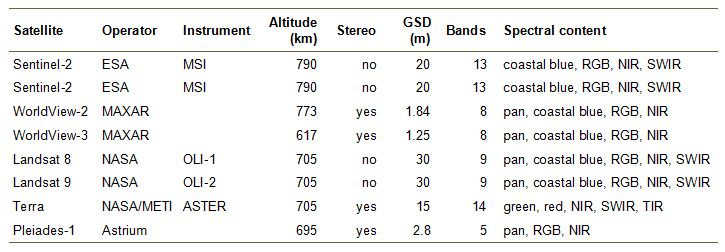

On a global scale, multispectral satellite images constitute the primary source for deriving hydrographic maps of the shallow water zone. Table 2 provides a list of frequently used multispectral satellites for both spaceborne multimedia photogrammetry and spectrally derived bathymetry.

The GSD reported in Table 2 refers to the multispectral bands. In addition, most sensors also provide a panchromatic channel, often at higher spatial resolution. The pan channels are beneficial for photo bathymetry but generally do not add extra information for SDB. The provided spectral bands typically include a water-penetrating coastal blue channel at the short-wavelength (violet-blue) end of the visible spectrum (λ≈440nm), multiple visible channels (blue, green, red, red edge) as well as near infrared (NIR), short wave infrared (SWIR), and thermal infrared (TIR) channels. For deriving hydrographic products, the NIR channels provide the basis for sun glint corrections (Lyzenga et al., 2006) and the visible channels are employed for deriving bathymetry.

SDB based on multispectral satellite images constitutes by far the most frequently employed use case. Reasons for the popularity include the open data access of some products (e.g., Sentinel-2, Landsat 8 etc.), the maturity of the technique, the narrow image FoV of typically much less than ±10° which spares refraction correction, and the fact that single images are a sufficient data basis. In the recent literature, applications have been reported in Le Quilleuc et al. (2022), Lawen et al. (2022), Herrmann et al. (2022), Almar et al. (2022), Zhang, Chen, Le et al. (2022), Zhang, Chen and Mao (2022), Xu, Zhou et al. (2022), Daly et al. (2022), Najar et al. (2022), Al Najar et al. (2022), Thomas et al. (2022), Niroumand-Jadidi et al. (2022a), Mudiyanselage et al. (2022), Almar et al. (2021), Al Najar et al. (2021), Wu et al. (2022), and Sonogashira et al. (2020).

In contrast, stereo images are required for multimedia photogrammetry, which narrows down the potential satellite choice (e.g. WorldView, Pleiades, Terra/ASTER). Still, increased interest can be observed here as well, as this purely geometric technique is self-contained and does not require external reference data. Applications are, e.g., reported in Hodül et al. (2018) and Cao et al. (2019).

While the derivation of hydrographic products from spaceborne platforms has long been restricted to passive images, the advent of ICESat and its successor ICESat-2 (Markus et al., 2017; Neumann et al., 2019) changed this situation fundamentally. While the ATLAS aboard ICESat-2 does not provide full areal shallow water coverage at high spatial resolution, it perfectly complements existing multispectral instruments by providing reliable underwater reference topography on a per laser spot basis. This is especially useful for deep learning based SDB approaches which require abundant training data. This apparent advantage has already been used by several researchers (cf. Section 4.5).

ATLAS is a single-photon sensitive laser altimeter using green laser radiation (λ=532nm) and is thus ideally suited for bathymetric purposes next to its prime application of capturing the Earth’s cryosphere. The LiDAR sensor contains six transmitters and corresponding receivers, which are aligned in three parallel lines. Three high energy lasers (pulse energy: 1.2mJ) are always paired with a corresponding low energy laser (strong:weak beam energy ratio=4:1). The pulse repetition frequency is 10kHz, which results in an along-track point spacing of 0.7m with a laser footprint diameter of 14m (i.e., along-track oversampling). The across-track spacing of the corresponding strong-weak laser pairs measures 90m and the spacing of the three pairs amounts to 3.3km (i.e. across-track undersampling). The revisit cycle is 91 days, thus enabling multi-temporal applications on a global scale. A thorough description and assessment of the bathymetric capabilities of ATLAS is published in Parrish et al. (2019). A spaceborne oceanographic LiDAR simulator is presented in Zhang, Chen and Mao (2022), highlighting that next to the commonly used green laser wavelength of 532nm, the use of lasers in the coastal blue and blue domain of the spectrum (λ=440/490nm) would achieve the greatest depth in oligotrophic seawater in the subtropical zone.
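The along-track oversampling and across-track undersampling of ATLAS follow directly from the quoted numbers, as the following small sketch shows. The ground speed of about 7km/s is not stated in the text; it is an assumption implied by the 0.7m spacing at 10kHz, and the function names are hypothetical.

```python
def along_track_spacing(ground_speed_m_s: float, prf_hz: float) -> float:
    """Distance [m] between consecutive laser shots on the ground."""
    return ground_speed_m_s / prf_hz


def sampling_ratio(footprint_m: float, spacing_m: float) -> float:
    """Footprint diameter divided by shot spacing: >1 means oversampling, <1 undersampling."""
    return footprint_m / spacing_m


if __name__ == "__main__":
    spacing = along_track_spacing(7000.0, 10_000.0)  # assumed ground speed of 7km/s
    print(spacing)                         # along-track spacing [m]
    print(sampling_ratio(14.0, spacing))   # along-track: footprints overlap heavily
    print(sampling_ratio(14.0, 3300.0))    # across-track: large gaps between beam pairs
```

Under these assumptions, each ground location is covered by about 20 consecutive footprints along track, whereas only a small fraction of the 3.3km between beam pairs is illuminated across track.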

5.2 Airborne sensors

The classical application of optical hydrography is from crewed aircraft. All acquisition methods discussed in Sections 2–4 are employed from crewed airborne platforms. Any kind of metric camera, which is conventionally used for topographic applications and orthophoto production, can also be used for deriving hydrographic products. This especially applies to photogrammetric cameras providing a NIR channel alongside the visible channels. Examples include the UltraCam camera series (Vexcel Imaging) or the MFC150 camera (Leica Geosystems). Today, medium format cameras manufactured by PhaseOne are playing an increasingly important role due to their light weight and flexible integration options. The disadvantage of not featuring a 4-band RGBI product can be compensated by integrating two cameras with different spectral filters (Mandlburger et al., 2021).

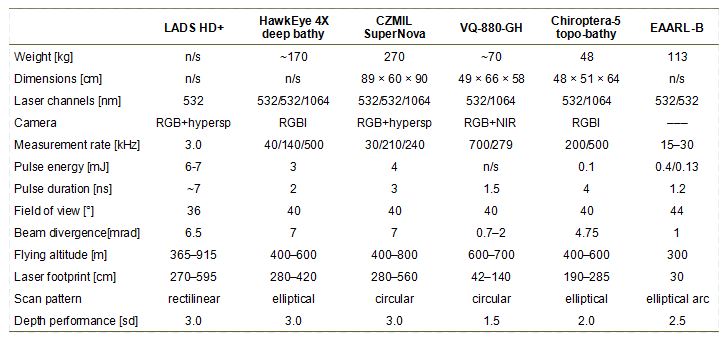

Airborne laser bathymetry sensors can be divided into: (i) deep bathymetric, (ii) shallow topo-bathymetric, and (iii) multi-purpose sensors. Deep bathymetric sensors aim at maximizing the penetration depth. They employ lasers with a relatively long pulse duration of around 7ns and a low measurement rate of 3–10kHz to achieve a high pulse energy of approx. 7mJ. To comply with eye safety regulations, the beam divergence of such sensors is large (7mrad), resulting in a laser footprint diameter of 3–4m when operated at an altitude of 500m. Thus, a high depth penetration of typically 3sd comes at the price of a moderate spatial resolution.

The so-called topo-bathymetric sensors focus on higher spatial resolution for capturing shallow inland and coastal water areas with high relief energy (rocks, boulders, sudden slope changes, etc.). They use short and narrow laser beams (pulse duration: 1–2ns, beam divergence: 0.7–2mrad) and higher pulse repetition rates of up to 700kHz resulting in laser footprint diameters of 0.5–1m on the ground and a point density of around 25 points/m2 in a single flight strip. The short pulse length enables separation of laser returns from water surface and bottom also for very shallow areas with water depths less than 20cm and thus a seamless transition between water and land. On the other hand, short pulse lengths also entail a lower pulse energy and consequently a lower depth penetration of typically 1.5sd.
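The footprint diameters quoted for deep bathymetric and topo-bathymetric sensors can be reproduced with the small-angle relation between beam divergence and range. The sketch below is an approximation only: it neglects the beam diameter at the exit aperture and any off-nadir scan angle, the function name is hypothetical, and the flying altitude of 500m for the topo-bathymetric case is an assumed value.

```python
def footprint_diameter(range_m: float, divergence_mrad: float) -> float:
    """Approximate laser footprint diameter [m]: range times full beam divergence."""
    return range_m * divergence_mrad * 1e-3


if __name__ == "__main__":
    altitude = 500.0  # m above the water surface (assumed for both sensor classes)
    print(footprint_diameter(altitude, 7.0))  # deep bathymetric sensor
    print(footprint_diameter(altitude, 0.7))  # topo-bathymetric, narrow beam
    print(footprint_diameter(altitude, 2.0))  # topo-bathymetric, wide beam
```

With these inputs, the relation yields 3.5m for a divergence of 7mrad and 0.35–1.0m for divergences of 0.7–2mrad, in line with the orders of magnitude stated in the text.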

In recent years, multispectral and Single Photon LiDAR (SPL) scanners were introduced to the market. Neither type of instrument is specifically tailored for hydrographic mapping but both, nevertheless, exhibit bathymetric capabilities (Fernandez-Diaz et al., 2016; Degnan, 2016).

Airborne multispectral laser scanners feature all three commonly used laser wavelengths (λ=532/1064/1550nm) and enable the derivation of vegetation indices facilitating point classification (Fernandez-Diaz et al., 2016). The main purpose of SPL is wide-area topographic mapping (Degnan, 2016), but due to the use of (i) a green laser and (ii) very sensitive detectors, this technology also comes with moderate bathymetric capabilities with a depth penetration performance of approx. 1sd. It is noted that Single Photon LiDAR technology is also used by the ATLAS instrument aboard the ICESat-2 satellite (Markus et al., 2017; Neumann et al., 2019; Parrish et al., 2019).

Traditional ALB systems utilize coaxial infrared and green laser beams for water surface and water bottom detection on a per pulse basis (Guenther et al., 2000; Wozencraft and Lillycrop, 2003; Fuchs and Tuell, 2010). Other sensors use disjoint infrared and green lasers (cf. Figure 9). Such a design allows precise reconstruction of static water surfaces from the non-water penetrating infrared channel. Some modern ALB instruments, however, use green lasers only to detect both water surface and bottom (Wright et al., 2016; Pfennigbauer et al., 2011). This poses challenges for water surface modeling as the laser return signal from the water surface comprises intermingled components of specular reflections at the air-water interface and sub-surface volume backscattering. The derivation of precise water surface models from green-only scanners thus requires sophisticated data processing (Thomas and Guenther, 1990; Birkebak et al., 2018a,b; Schwarz et al., 2019).

Table 3 summarizes the specs of selected ALB scanner systems. The list contains examples for both deep and shallow topo-bathymetric sensors. The first instrument (LADS HD+) features a deep bathy channel only and the following two (HawkEye 4X, CZMIL SuperNova) contain both deep and shallow bathy channels. In all three cases, the parameters of the deep bathy channels are listed. The remaining instruments all constitute topo-bathymetric scanners containing one shallow water and an additional IR channel (Chiroptera-5, VQ-880-GH) or two shallow water channels (EAARL-B; McKean, Nagel et al., 2009). For these instruments the specs of the shallow bathy channels are reported. Except for the pulse repetition rate, the specs of topographic IR channels are not contained in Table 3. All reported values have been collected to the best of the author’s knowledge based on the manufacturer’s spec sheets and/or published papers.

Table 3 illustrates that the deep bathy sensors provide a high penetration depth of up to 3.0sd but a moderate measurement rate of 3–40kHz, resulting in a point density of ≤2 points/m2 and large footprint sizes in the range of 3–7m. The topo-bathymetric scanners, in turn, feature small footprint diameters in the sub-m range and higher measurement rates of up to 700kHz at the price of limited depth penetration (1.5sd). In addition to the laser scanners, most of the listed sensors also contain RGB or RGBI cameras. The images are mainly employed for photo documentation or as data basis for point cloud colorization, but the use of high-resolution metric cameras (e.g., RCD30, PhaseOne IXU, etc.) also opens the floor for bathymetry estimation via either photogrammetry or SDB. Respective use cases for using concurrently captured bathymetric LiDAR and multispectral images for SDB model calibration have already been discussed in Section 4.5.

Concerning vertical accuracy, all sensors listed in Table 3 meet one of the accuracy standards formulated by the International Hydrographic Organization (IHO, 2020). Especially the topo-bathymetric instruments are designed to comply with the rigorous versions of the standard like the Special Order specification requiring a Total Vertical Uncertainty (TVU) of 25cm for 95% of the measured bottom points and a Total Horizontal Uncertainty (THU) of 2m along with a 100% bathymetric coverage.
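In the IHO standard, the maximum allowable TVU is depth dependent and is commonly written as sqrt(a² + (b·d)²), where the 25cm quoted above corresponds to the constant term a of the Special Order. The following sketch evaluates this limit and checks a set of depth errors against it. It is an illustration only: the parameter values a=0.25m and b=0.0075 for Special Order are quoted from memory of the standard and should be verified against the current edition, and the function names are hypothetical.

```python
import math

# (a [m], b [-]) for Special Order; verify against the current IHO standard before use
SPECIAL_ORDER = (0.25, 0.0075)


def tvu_limit(depth_m: float, a: float, b: float) -> float:
    """Maximum allowable total vertical uncertainty [m] at a given depth."""
    return math.sqrt(a * a + (b * depth_m) ** 2)


def meets_order(errors_m, depths_m, a, b, confidence=0.95):
    """True if at least `confidence` of the absolute depth errors stay within the limit."""
    within = sum(abs(e) <= tvu_limit(d, a, b) for e, d in zip(errors_m, depths_m))
    return within / len(errors_m) >= confidence


if __name__ == "__main__":
    a, b = SPECIAL_ORDER
    for depth in (0.0, 10.0, 40.0):
        print(depth, round(tvu_limit(depth, a, b), 3))
```

For the shallow depths typically reached by topo-bathymetric scanners, the depth-dependent term contributes little, so the limit stays close to 25cm.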

5.3 UAV-borne sensors

Until a few years ago, bathymetric laser scanners could only be operated from crewed platforms (aircraft, helicopters, gyrocopters) due to their considerable weight (cf. Table 3). With ongoing sensor miniaturization and progress in the development of uncrewed aerial platforms, compact laser scanners can now also be integrated on both fixed-wing and multi-rotor UAVs. Drones are typically operated from low flying altitude of about 50–120m above ground level and with moderate flying velocity of 4–10m/s entailing a significantly smaller laser footprint size as well as a higher point density and, thus, a higher spatial resolution compared to operation from crewed airborne platforms at higher altitudes. Furthermore, due to the shorter measurement range, signal attenuation in the atmosphere is also significantly lower and more signal strength is effectively available for penetrating the water body. This especially applies to UAV-borne bathymetric laser sensors but also plays a role for image derived bathymetry.

As light-weight cameras were available long before the advent of compact laser scanners, the use of UAV cameras for photogrammetric mapping in general (Colomina and Molina, 2014) and for hydrographic applications in particular (Dietrich, 2016) emerged earlier than UAV-borne laser bathymetry. As already stated in Section 5.2, all cameras which are suited for mapping topography are also suited for hydrography. While high-end camera systems including an IR channel in addition to the visible RGB are often available for crewed airborne platforms, this is rarely the case for UAV images. However, ongoing research demonstrates that RGB images are an appropriate basis for both multimedia photogrammetry and SDB (He et al., 2021; Templin et al., 2018; Carrivick and Smith, 2019; Watanabe and Kawahara, 2016; Gentile et al., 2016; Shintani and Fonstad, 2017; Dietrich, 2016; Koutalakis and Zaimes, 2022; Rossi et al., 2020; Wang, Chen et al., 2022; Specht et al., 2022; Alevizos et al., 2022).

In most cases, multi-rotor UAV platforms are used for image-based hydrography with the distinct advantage of the versatility of such platforms (i.e., minimal space for starting/landing required, stop-and-go mode, arbitrary waypoint-based flight paths). In addition to that, fixed-wing UAVs are also in use (He et al., 2021; Escobar Villanueva et al., 2019; Templin et al., 2018). They feature longer flight endurance and therefore higher areal coverage. In general, the growing mass market for UAVs with image and video capabilities boosts the use of such consumer-grade instruments for hydrographic applications. A prominent example is the DJI Phantom 4 RTK drone featuring a 20 MPix RGB camera with a mechanical (global) shutter. While the Phantom 4 camera is not metric in the strict sense, the inner orientation is sufficiently stable to allow the derivation of 3D point clouds above and below the water table.

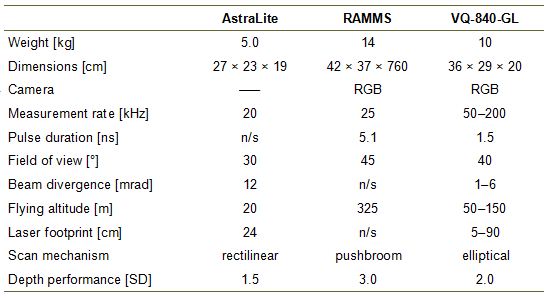

In the recent past, the advent of bathymetric laser scanners integrated on UAVs with a maximum take-off mass (MTOM) below 35kg can be seen as another major leap in the field of airborne laser bathymetry with respect to spatial resolution as well as depth performance. Just as the advent of shallow-water topo-bathymetric scanners in addition to traditional deep-water sensors has increased spatial resolution, UAV-based topo-bathymetric scanners have boosted achievable point density by another order of magnitude. The laser footprint diameter of modern UAV-borne bathymetric scanners is in the sub-dm range, and together with point densities in the order of 100–200 points/m2 this not only enables mapping of submerged topography in high detail but also allows detection and modeling of flow-relevant micro-structures like small boulders.

To date, only a few UAV-borne bathymetric laser scanners are available. The ASTRALiTe sensor (Mitchell and Thayer, 2014) is a scanning polarizing LiDAR. The sensor uses a 30mW laser, is typically operated from a low flying altitude of around 20m above ground level and therefore provides limited areal measurement performance. The depth performance of 1.2sd is also moderate, but the small weight allows integration on many commercially available multi-rotor UAV platforms. The RAMMS (Rapid Airborne Multibeam Mapping System; Mitchell, 2019; Ventura, 2020) features a remarkable depth penetration of 3sd and strictly avoids moving parts. With every laser shot, the sensor emits an entire laser line, which is captured by multiple receivers. The concept therefore resembles the principle of multibeam echo sounding with a single ping and multiple transducers. The instrument weighs 14kg and is rather designed for integration on light aircraft and helicopters but can also be mounted on powerful UAV platforms. The same also applies to the VQ-840-GL (weight: 10kg), where beam deflection is realized with a rotating mirror. The scanner features a user-definable beam divergence and receiver FoV, which allows balancing depth measurement performance (≥2sd) against spatial resolution (Mandlburger et al., 2020). The main parameters of the described instruments are summarized in Table 4.

5.4 Underwater sensors

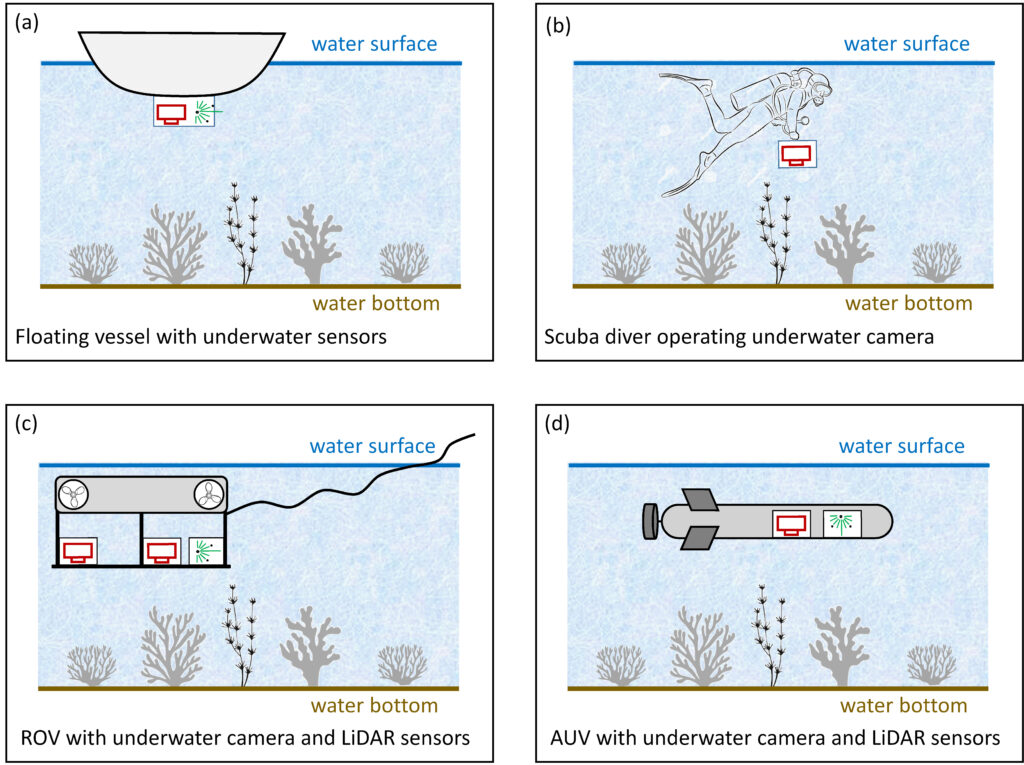

Although the article mainly focuses on optical hydrographic methods, where the sensor is located above the water table, the following section contains a brief discussion of underwater sensors and platforms. In general, the four different scenarios schematically sketched in Figure 11 can be distinguished: (a) the vessel is floating on the water surface and the imaging sensors (cameras and/or laser scanners) are located at the bottom of the vessel within a watertight housing, (b) a scuba diver is manually operating a single camera or a stereo camera rig, (c) imaging sensors are integrated on a remotely operated vehicle (ROV) with a wire-based communication link, and (d) imaging sensors are installed on an autonomous underwater vehicle (AUV).

In any case, the distance between sensor and target is relatively small, which allows mapping objects in very high spatial resolution but poses additional challenges for sensor orientation. Except for the floating vessel case, not only the sensor but also the platform is entirely under water; thus, GNSS is not available for positioning the sensor. In the GNSS-denied case, image orientation is accomplished either via control points (Maas, 2015; Cahyono et al., 2020), visual odometry (Botelho et al., 2010), or Simultaneous Localization and Mapping (SLAM) techniques (Barkby et al., 2009; Massot-Campos et al., 2016; Ma et al., 2020). Under water, imaging sensors can either be handheld by a scuba diver or mounted on a ROV or AUV, respectively. The use of ROVs for underwater inspection is becoming increasingly widespread with applications in mapping and monitoring of off-shore facilities and hydro-power plants. A review of inspection-based ROVs is published in Capocci et al. (2017). Besides ROVs, fully autonomously operating underwater vehicles are also rapidly developing. They are already used for mapping large areas of the seafloor in depths of several thousand meters. In an early review, Bellingham (2009) describes the principle of operation and navigation of AUV platforms. Considering the absence of GNSS in underwater areas, localization and navigation mainly rely on inertial navigation and SLAM (Sahoo et al., 2019). Next to hydroacoustic sensors, AUVs also integrate optical imaging sensors like lasers and stereo cameras, but application is hampered, among other things, by the absence of light and by constraints concerning energy consumption. Despite these difficulties, high resolution mapping was already successfully conducted for square kilometers of deep ocean floor (Kwasnitschka et al., 2016).

While spectral methods are rarely used in underwater photogrammetry, the application of SfM-based photo bathymetry is widespread. Both mirrorless cameras (Sony a1, Sony a7R, Olympus E-PL10, Nikon Z7, Canon EOS R5, etc.) and digital single-lens reflex (DSLR) cameras (Canon EOS Rebel SL3, Nikon D850/780/500) are in use. In any case, the cameras need to be operated within a waterproof housing, for which purpose flat and dome ports can be employed. The differences between both housing concepts are analyzed and discussed in Menna et al. (2017a,b).

Next to stereo-photogrammetry, laser scanning is also used under water. For reasons of eye safety, scanners are predominantly integrated on ROVs and AUVs. Underwater laser scanners are operated using (i) the ToF measurement principle based on pulsed green lasers, (ii) triangulation based on structured light, and (iii) frequency modulation. More detailed reviews of underwater laser scanning can be found in Filisetti et al. (2018) and Massot-Campos and Oliver-Codina (2015).

6. APPLICATIONS

The applications of SDB, photo and laser bathymetry are manifold and more use case scenarios are currently emerging due to the tremendous progress in sensor and platform technology. Especially sensor miniaturization and the introduction of remotely piloted or even autonomously operating platforms open new possibilities for mapping, inspection, monitoring and documentation of underwater topography, artifacts, and infrastructure. It is beyond the scope of this paper to address all application fields, but exemplary use cases are discussed in the following subsections.

6.1 Large-area shallow water mapping

Optical methods are well suited for mapping shallow water areas with moderate depths of less than 60m. The most effective technique for large-area mapping is satellite derived bathymetry. Daly et al. (2022), for example, report on the mapping of a 4000km stretch along the West African coast based on Sentinel-2 multispectral images up to a depth of 35m, also featuring details like ebb delta lobes and underwater dunes. A global approach of satellite-based coastal bathymetry was published in Almar et al. (2021). The authors claim that the seafloor could be resolved up to a depth of 100m, covering most continental shelves with an area of 4.9 million km2. While the depth accuracy of 6–9m is moderate, the global coverage is of particular interest for countries which do not have the possibility to carry out in-situ measurements.

Next to SDB, also airborne laser bathymetry based on crewed aircraft can provide large-area coverage with a much higher vertical accuracy complying with strict IHO standards (IHO, 2020). Many reports are available highlighting the potential of ALB for wide-area mapping with meter resolution and sub-meter vertical accuracy for coastal areas, e.g., in the Baltic Sea (Song et al., 2015; Ellmer, 2016) to name just one example. The widespread use of bathymetric LiDAR is also documented by the availability of (open) data archives, e.g. managed and maintained by NOAA (National Oceanic and Atmospheric Administration) in the U.S.A. (NOAA, 2022).

6.2 Ship navigation

For navigational purposes, high positional and vertical accuracy as well as full areal coverage are required. In general, satellite techniques based on multispectral images do not fulfill the stringent IHO requirements, and airborne laser bathymetry is therefore the method of choice for nautical applications where safety and ease of navigation are paramount. Precise nautical charts are an indispensable prerequisite for safe ship navigation both in coastal environments (Wozencraft and Millar, 2005) and on navigable inland rivers. For the latter, high spatial resolution is required in addition to high accuracy, which is why topo-bathymetric laser scanners are the first choice. An application example at the Elbe River in Germany is published in Kühne (2021) based on methods and software described in Steinbacher et al. (2021).
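
To make the IHO accuracy requirements more tangible, the following minimal sketch checks a depth measurement against a survey order. It assumes the total vertical uncertainty (TVU) limit of IHO S-44, sqrt(a² + (b·d)²) at the 95% confidence level for depth d. The coefficient table reflects S-44 Edition 6 as understood here and should be verified against the published standard; the function and variable names are illustrative only.

```python
import math

# (a, b) coefficients per survey order. These values are assumed from
# IHO S-44 Edition 6 and must be verified against the standard before use.
S44_TVU_COEFFICIENTS = {
    "exclusive": (0.15, 0.0075),
    "special": (0.25, 0.0075),
    "1a": (0.50, 0.013),
    "1b": (0.50, 0.013),
    "2": (1.00, 0.023),
}


def max_allowable_tvu(depth_m, order):
    """Return the maximum allowable TVU (95% confidence) in meters."""
    a, b = S44_TVU_COEFFICIENTS[order]
    # a is the depth-independent part, b * depth the depth-dependent part.
    return math.sqrt(a ** 2 + (b * depth_m) ** 2)


def meets_order(depth_m, tvu_95_m, order):
    """Check whether an estimated 95% vertical uncertainty satisfies an order."""
    return tvu_95_m <= max_allowable_tvu(depth_m, order)
```

For a depth of 10m, for instance, `meets_order(10.0, 0.3, "special")` evaluates to `False`, whereas the same uncertainty satisfies order `"1a"` under the assumed coefficients.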

6.3 Archaeology and cultural heritage

With the rise of the sea level, many archaeological sites are now submerged. This especially applies to sites from the Roman era, traces of which (ancient harbors, etc.) are found in the Mediterranean Sea. The 3D reconstruction of submerged structures requires relatively high spatial resolution, which is why topo-bathymetric LiDAR and multimedia stereo-photogrammetry are the preferred techniques. Doneus et al. (2013, 2015), for example, used laser bathymetry to record the remnants of a Roman villa in the Adriatic Sea in Croatia and to map traces of a late Neolithic dwelling in an Austrian freshwater lake. If higher spatial resolution than the dm level is required, close-range underwater photogrammetry is the method of choice. Drap (2012) published a book chapter on the application of underwater photogrammetry in archaeology. Furthermore, the fusion of photogrammetric datasets from both above and below the water surface is described in Nocerino and Menna (2020) using the Costa Concordia shipwreck as a prominent example.

6.4 Coral reef mapping and monitoring

Another prime application of underwater photogrammetry is the mapping of coral reefs. Corals have a very complex 3D structure that can usually only be fully captured using close-range techniques. Since coral reefs are very fragile ecosystems, monitoring their growth is an important issue in the context of climate change. Today, coral reef mapping is typically done with SfM-based methods (Cahyono et al., 2020) with stereo or multi-camera rigs carried by scuba divers (Nocerino et al., 2020). Photogrammetric data processing is often based on standard SfM software (Burns and Delparte, 2017). If the highest resolution and accuracy are not required, through-water photobathymetry using UAVs as carrier platforms can also be used (Casella et al., 2022). As an alternative to multimedia photogrammetry, airborne laser bathymetry is also suitable for mapping coral reefs, with UAV-based bathymetric LiDAR being particularly well suited (Wilson et al., 2019; Wang, Xing et al., 2022).

6.5 Coastal protection and monitoring

With more than 200 million people living along coastlines that are less than 5m above sea level, there is an obvious need for mapping coastal areas with a focus on protecting this sensitive transition zone between sea and land. SDB is the method of choice when global coverage and frequent updates are more important than high spatial resolution. Where high spatial resolution is required, laser bathymetry is widely used, and some countries have even introduced mapping programs for coastal protection and monitoring at the federal level with regular update cycles, e.g., Schleswig-Holstein in Germany (Christiansen, 2016, 2021). In the U.S.A., coastal change monitoring has been conducted for decades using the Compact Hydrography Airborne Rapid Total Survey (CHARTS) system, which includes bathymetric and topographic laser scanners and aerial cameras (Macon, 2009).

6.6 Benthic habitat mapping

Another important application of optical methods in hydrography, in addition to charting the bottom topography, is the mapping of benthic habitats. All three techniques (SDB, photo bathymetry, laser bathymetry) are suited to this purpose and are employed for it. For example, Wedding et al. (2008) used laser bathymetry to estimate substrate rugosity, which proved to be a good predictor of fish biomass. High-resolution topo-bathymetric LiDAR was the prime data source used in Parrish et al. (2016) to map sea grass and estimate the impact of Hurricane Sandy. Sea grass mapping based on topo-bathymetric LiDAR was also the focus in Letard et al. (2021). Mandlburger et al. (2015) investigated the use of topo-bathymetric LiDAR to map instream micro- and mesohabitats of a near-natural river and their changes in response to flood events. In a recent study, Letard et al. (2022) used green and IR laser data to map and classify estuarine habitats and produced 3D maps of 21 land and marine cover types at very high resolution.

High-resolution WorldView-2 satellite images served as the basis for mapping sea grass in Su and Gibeaut (2013). Based on multispectral satellite images, Salavitabar and Li (2022) were able to provide a high-resolution data basis for the restoration of fish habitats, and Legleiter and Hodges (2022) used multispectral aerial and satellite images to map algal density variations in shallow, clear-flowing rivers using the band ratio algorithm (Legleiter et al., 2009). Finally, various applications of SfM based on close-range UAV images for mapping fluvial habitats are described in Carrivick and Smith (2019).

6.7 Post-disaster documentation

Optical methods have proven successful in documenting the effects of disasters such as hurricanes, tsunamis, and floods. On a global scale, and when rapid response is required, satellite imagery is the best choice because of its regular revisit cycle. However, the morphological changes triggered by disasters are often small-scale and therefore require high-resolution techniques such as photo bathymetry or laser bathymetry. Topo-bathymetric LiDAR was used, for example, to assess the impact of Hurricane Sandy on benthic habitats (Parrish et al., 2016) and to estimate the impact of a 30-year flood event on fish habitats in a pre-Alpine gravel-bed river (Mandlburger et al., 2015). In addition to airborne laser bathymetry, various UAV-based remote sensing techniques can be used for marine monitoring, including disaster documentation (Yang, Yu et al., 2022). The use of small UAVs as carrier platforms has the disadvantage that only moderate area coverage is achieved due to limited flight time. However, their versatility and low mobilization costs are clear advantages that enable rapid production of disaster maps with a high level of detail.

6.8 Infrastructure mapping and inspection

With the increase in offshore installations (oil and gas, wind turbines, etc.), the inspection, mapping and monitoring of underwater infrastructure is becoming increasingly important. The same is true for hydropower plants. Both ROVs and AUVs are used to access the survey objects (Capocci et al., 2017). Close-range multimedia photogrammetry is commonly used for precise underwater infrastructure mapping, as detailed in Chemisky et al. (2021). However, in addition to stereo cameras, different types of laser scanners are also employed (Filisetti et al., 2018; Massot-Campos and Oliver-Codina, 2015). In general, infrastructure inspection and monitoring require different approaches depending on the application: sometimes sub-mm precision is required, for example to check the shape of a turbine blade, while in other cases it may only be necessary to check for the presence of an obstruction (i.e., classifying an image or image sequence).

7. SUMMARY AND CONCLUSIONS

This article provided an overview of optical methods in hydrography and a discussion of the sensors, platforms and typical applications. The established methods are (i) spectrally derived bathymetry, (ii) multimedia stereo photogrammetry, and (iii) laser bathymetry. All three methods can be operated from space-based, crewed and uncrewed airborne, and underwater platforms.

The spectral method is predominantly applied to multispectral satellite images, with the inherent advantage of providing global coverage. For this method, external reference data are necessary for calibrating the physics- or regression-based models. Today, machine learning techniques increasingly replace traditional depth inversion methods.
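
The role of external reference data in calibrating a regression-based model can be illustrated with a minimal sketch. It assumes a simple log band ratio model, depth = slope · ln(R_a / R_b) + intercept, fitted by ordinary least squares to reference depths. The model form, the band choice and all function names are illustrative assumptions and do not reproduce the exact procedure of any of the cited studies.

```python
import math


def log_band_ratio(band_a, band_b):
    """Natural log of the per-pixel ratio of two (positive) reflectances."""
    return [math.log(a / b) for a, b in zip(band_a, band_b)]


def fit_log_ratio_model(band_a, band_b, reference_depths):
    """Fit depth = slope * ln(band_a / band_b) + intercept by least squares.

    reference_depths are the external calibration data, e.g. from echo
    sounding or laser bathymetry (hypothetical inputs in this sketch).
    """
    x = log_band_ratio(band_a, band_b)
    y = list(reference_depths)
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict_depth(band_a, band_b, slope, intercept):
    """Apply the calibrated model to pixels without reference depths."""
    return [slope * x + intercept for x in log_band_ratio(band_a, band_b)]
```

The fitted coefficients are only valid for the scene and water conditions they were calibrated on, which is why the reference data remain a prerequisite for this class of methods.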

Crewed aircraft are the platform of choice for operating laser bathymetry. This active remote sensing technique provides good depth penetration of about three times the Secchi depth, efficient area coverage with the intrinsic advantage that swath width does not depend on water depth but only on flight altitude, and excellent position and height uncertainty that even meets the stringent specifications of the International Hydrographic Organization. The latter especially applies to the shallow water channels of modern topo-bathymetric laser scanners, which provide high spatial resolution in the sub-meter domain and a depth precision in the dm-range at the price of a reduced depth performance. Thanks to advances in sensor and platform technology, bathymetric laser scanners can now be integrated onto UAVs, providing sub-dm spatial resolution and accuracy.
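
The two rules of thumb above lend themselves to a short back-of-the-envelope sketch. It assumes a flat water surface and a scan pattern that is symmetric about nadir, so that the swath width follows from flight altitude and half scan angle alone; the factor of three times the Secchi depth is taken from the text, while the function names and the example values are hypothetical.

```python
import math


def max_penetration_depth(secchi_depth_m, secchi_factor=3.0):
    """Rule-of-thumb depth penetration as a multiple of the Secchi depth."""
    return secchi_factor * secchi_depth_m


def swath_width(flight_altitude_m, half_scan_angle_deg):
    """Swath width on a flat surface for a scan symmetric about nadir.

    The result depends on flight altitude and scan angle only, not on
    water depth.
    """
    half_angle_rad = math.radians(half_scan_angle_deg)
    return 2.0 * flight_altitude_m * math.tan(half_angle_rad)


# Hypothetical example: 500 m flight altitude, 20 degree half scan angle,
# 5 m Secchi depth.
example_swath_m = swath_width(500.0, 20.0)
example_depth_m = max_penetration_depth(5.0)
```

Sensors designed for shallow water would use a smaller `secchi_factor`, reflecting the reduced depth performance mentioned above.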

Multimedia photogrammetry, on the other hand, is mostly used in underwater surveying, i.e., both the objects and the sensor are located below the water surface. In this case, the sensors are located in waterproof housings that are either flat or spherical. Of all optical methods, multimedia photogrammetry has the longest history, dating back to a seminal work in 1948. Today, methodological development is driven by computer vision, which led to the introduction of Structure from Motion (SfM) and Dense Image Matching (DIM) in stereo photogrammetry in general and multimedia photogrammetry in particular.

Optical methods are limited in terms of measuring distance due to the strong attenuation of light in water. In addition to the attenuation of pure clear water, turbidity further hampers depth penetration. The maximum achievable depth is around 60–75m in very clear water. Therefore, optical methods are limited to shallow water when operated from air or space, or to use cases where the sensor is underwater and close to the object. As mentioned earlier, echo sounding is less efficient in shallow waters than aerial optical methods, and vessel operation can even be dangerous in very shallow waters. Thus, optical and hydroacoustic methods do not compete with each other so much as they are synergistic.
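
The effect of attenuation on measuring distance can be sketched with a simple exponential (Beer-Lambert type) decay. Describing the water column by a single effective attenuation coefficient is a strong simplification that ignores surface losses, bottom reflectance and sensor characteristics; the coefficient values used with this sketch are hypothetical, and the function names are illustrative.

```python
import math


def remaining_fraction(path_length_m, attenuation_per_m):
    """Fraction of light remaining after traveling a path through water."""
    return math.exp(-attenuation_per_m * path_length_m)


def two_way_fraction(depth_m, attenuation_per_m):
    """Fraction remaining after traveling down to the bottom and back up.

    Assumes a vertical path, so the total path length is twice the depth.
    """
    return remaining_fraction(2.0 * depth_m, attenuation_per_m)
```

With an assumed coefficient of 0.1 per meter, `two_way_fraction(10.0, 0.1)` equals exp(-2), i.e. about 13.5% of the signal survives the round trip to 10m depth, and every further 10m reduces it by the same factor again. Turbidity raises the coefficient and shortens the usable range accordingly.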

A bibliographic review has shown that the number of publications in the field of spectrally derived, photo and laser hydrography has increased by an order of magnitude over the past decade and has now reached a level of around 200 publications per year. One of the reasons for this is the increasing availability of open data. This especially applies to space-borne images from multispectral satellites like Sentinel-2 or Landsat 9 and space-borne laser data from the ATLAS instrument aboard ICESat-2. Another reason is the rise of machine learning, which is about to become a standard tool for processing active and passive imaging data for hydrographic purposes. A third reason is the advent of low-cost UAVs as carrier platforms for local airborne hydrographic surveys. Finally, the availability of affordable consumer-grade sensors has further invigorated both through-water and underwater data acquisition.

In summary, optical methods are an efficient alternative to traditional hydroacoustic surveys in shallow waters. Both techniques complement each other with regard to their respective fields of application. For the future of optical methods in hydrography, it is foreseeable that continuous advances in sensor and platform technology on the one hand and advances in processing methods and computer performance on the other will further improve the quality of the derived products and also open up new fields of research. Especially in times of climate change, multi-temporal analyses will play an increasing role. This is already well established for space-based data with corresponding data archives, but needs to be extended to local high-resolution data from airborne, UAV-based and underwater platforms.

8. REFERENCES

Abdallah, H., Baghdadi, N., Bailly, J.-S., Pastol, Y. and Fabre, F. (2012). Wa-LiD: A New LiDAR Simulator for Waters. IEEE Geoscience and Remote Sensing Letters 9(4), pp. 744–748. http://dx.doi.org/10.1109/LGRS.2011.2180506

Agisoft (2022). Metashape – Photogrammetric processing of digital images and 3D spatial data generation. http://www.agisoft.com

Agrafiotis, P., Karantzalos, K., Georgopoulos, A. and Skarlatos, D. (2020). Correcting Image Refraction: Towards Accurate Aerial Image-Based Bathymetry Mapping in Shallow Waters. Remote Sensing 12(2). https://www.mdpi.com/2072-4292/12/2/322

Agrafiotis, P., Karantzalos, K., Georgopoulos, A. and Skarlatos, D. (2021). Learning from Synthetic Data: Enhancing Refraction Correction Accuracy for Airborne Image-Based Bathymetric Mapping of Shallow Coastal Waters. PFG – Journal of Photogrammetry, Remote Sensing and Geoinformation Science 89(2), pp. 91–109. https://doi.org/10.1007/s41064-021-00144-1

Agrafiotis, P., Skarlatos, D., Georgopoulos, A. and Karantzalos, K. (2019). DepthLearn: Learning to Correct the Refraction on Point Clouds Derived from Aerial Imagery for Accurate Dense Shallow Water Bathymetry Based on SVMs-Fusion with LiDAR Point Clouds. Remote Sensing 11(19). https://www.mdpi.com/2072-4292/11/19/2225

Al Najar, M., El Bennioui, Y., Thoumyre, G., Almar, R., Bergsma, E. W. J., Benshila, R., Delvit, J.-M. and Wilson, D. G. (2022). A combined color and wave-based approach to satellite derived bathymetry using deep learning. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences XLIII-B3-2022, pp. 9–16. https://www.int-arch-photogramm-remote-sens-spatial-inf-sci.net/XLIII-B3-2022/9/2022/

Al Najar, M., Thoumyre, G., Bergsma, E. W. J., Almar, R., Benshila, R. and Wilson, D. G. (2021). Satellite derived bathymetry using deep learning. Machine Learning. https://doi.org/10.1007/s10994-021-05977-w

Alevizos, E., Oikonomou, D., Argyriou, A. V. and Alexakis, D. D. (2022). Fusion of Drone-Based RGB and Multi-Spectral Imagery for Shallow Water Bathymetry Inversion. Remote Sensing 14(5). https://www.mdpi.com/2072-4292/14/5/1127

Allouis, T., Bailly, J.-S., Pastol, Y. and Le Roux, C. (2010). Comparison of LiDAR waveform processing methods for very shallow water bathymetry using Raman, near-infrared and green signals. Earth Surface Processes and Landforms 35(6), pp. 640–650. https://onlinelibrary.wiley.com/doi/abs/10.1002/esp.1959